Multi-exposure fusion for high dynamic range scene①

Shen Xiaohe (申小禾), Liu Jinghong

(*Changchun Institute of Optics Fine Mechanics and Physics, Chinese Academy of Sciences, Changchun 130033, P.R.China) (**University of Chinese Academy of Sciences, Beijing 100049, P.R.China) (***Beijing Institute of Aerospace Control Devices, Beijing 100039, P.R.China)

①Supported by the National Natural Science Foundation of China (No. 61308099, 61304032).

②To whom correspondence should be addressed. E-mail: shenxiaohe2008@163.com

Received on Mar. 20, 2017

Because of its limited dynamic range, a camera cannot reveal all the details in a high dynamic range scene. To solve this problem, this paper presents a multi-exposure fusion method for obtaining high quality images of high dynamic range scenes. First, a set of multi-exposure images is obtained by multiple exposures of the same scene, and their brightness condition is analyzed. Then, the multi-exposure images are decomposed using the dual-tree complex wavelet transform (DT-CWT) to obtain their low and high frequency components. Weight maps chosen according to the brightness condition are assigned to the low frequency components for fusion. Maximizing the region Sum Modified-Laplacian (SML) is adopted for fusing the high frequency components. Finally, the fused image is acquired by subjecting the fused low and high frequency coefficients to the inverse DT-CWT. Experimental results show that the proposed approach generates high quality results with uniformly distributed brightness and rich details. The proposed method is efficient and robust in various scenes.

multi-exposure fusion, high dynamic range scene, dual-tree complex wavelet transform (DT-CWT), brightness analysis

0 Introduction

The dynamic range of digital cameras is usually about 3 orders of magnitude, while the dynamic range of the natural world can be up to 9 orders of magnitude. When the dynamic range of a natural scene exceeds that of the camera, not all details in the scene can be shown in one image. However the shutter speed and aperture are adjusted[1], some regions of the image are always overexposed or underexposed. Exposure fusion solves this problem by fusing the well-exposed regions of the input images into a new image, and it can generate satisfactory results.

At present, many image fusion methods have been proposed. Exposure fusion methods include spatial domain methods[2-5] and multi-resolution analysis methods[6]. Spatial domain methods usually evaluate the exposure condition by analyzing the features of blocks and pixels, such as image entropy, gradient, saturation, and brightness. Li, et al.[2] estimated the weight maps by measuring local contrast, brightness and color dissimilarity, and then refined the weight maps with a recursive filter. Paul, et al.[5] proposed a multi-exposure fusion method in the gradient domain. At present, spatial domain methods are widely used in multi-exposure fusion. Multi-resolution analysis methods decompose the image into different matrices at different scales, and thus perform well in detail processing. Mertens, et al.[6] decomposed the input images using Laplacian pyramids and chose the weight for every image through saturation, contrast, and well-exposedness. Gaussian pyramids are constructed for the weight maps. The Laplacian and Gaussian pyramids are blended at each level, and the final result is reconstructed from the blended pyramid.

The existing multi-exposure fusion methods do not investigate the relationship between the overall intensity of an image and the exposure condition of every pixel in that image. In this paper, the brightness condition of a multi-exposure sequence is analyzed. The dual-tree complex wavelet transform (DT-CWT)[7,8] is employed for decomposing images into low and high frequency components. Low frequency components reflect the main energy and intensity; therefore, the weight maps for them are based on the brightness analysis. High frequency components reflect image details, and the Sum Modified-Laplacian (SML) reflects image details and definition[9]. Thus, the maximum region SML is adopted for high frequency fusion. Experimental results demonstrate that the proposed method performs well, with high definition and suitable brightness.

This paper includes 4 sections. In Section 1, the brightness characteristics of a multi-exposure sequence are analyzed. In Section 2, the proposed exposure fusion method is explained in detail. Experimental results and comparisons are discussed in Section 3. Finally, the conclusion is provided in Section 4.

1 Brightness analysis

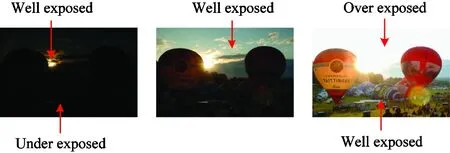

Every image of a multi-exposure sequence should contain pixels with appropriate exposure; otherwise, the image is not suitable for fusion. In an image with low brightness, the pixels that have high intensities are well exposed; likewise, in an image with high brightness, the pixels that have low intensities are well exposed. Fig.1 demonstrates this phenomenon.

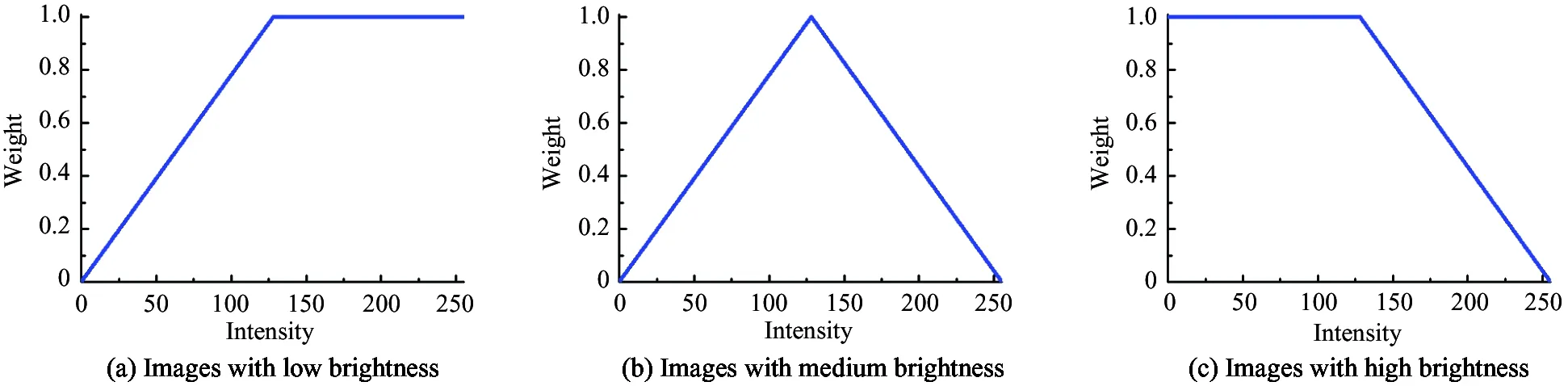

The purpose of multi-exposure fusion is to fuse the appropriately exposed regions of all images into one. Overexposed or underexposed regions should therefore receive less weight than well-exposed regions. In this paper, functions that relate intensity to weight are established, as shown in Fig.2.

Fig.1 A multi-exposure sequence

Two thresholds $p_l$ and $p_h$ are set for estimating the brightness. If more than half of the pixels in an image have values lower than $p_l$, the image is considered to have low brightness. Similarly, if more than half of the pixels have values greater than $p_h$, it is regarded as an image with high brightness. Otherwise, it is assumed to be an image with medium brightness. In this paper, $p_l$ and $p_h$ are set to 64 and 196, respectively.
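The thresholding rule above can be sketched in a few lines. This is a minimal illustration, assuming 8-bit intensities supplied as a flat sequence (which channel or luminance measure is used is not specified in the text); the function name `classify_brightness` is hypothetical.

```python
def classify_brightness(pixels, p_l=64, p_h=196):
    """Label an image as 'low', 'high', or 'medium' brightness.

    pixels: flat iterable of intensities in [0, 255] (assumed 8-bit).
    p_l, p_h: the two thresholds, 64 and 196 as stated in the text.
    """
    values = list(pixels)
    if not values:
        raise ValueError("image has no pixels")
    half = len(values) / 2
    dark = sum(1 for v in values if v < p_l)     # pixels below the low threshold
    bright = sum(1 for v in values if v > p_h)   # pixels above the high threshold
    if dark > half:        # strictly more than half of the pixels are dark
        return "low"
    if bright > half:      # strictly more than half of the pixels are bright
        return "high"
    return "medium"
```

Since 64 < 196, a pixel cannot be counted as both dark and bright, so the two majority conditions cannot hold at the same time and the order of the checks does not matter.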

Fig.2 Weight functions

2 Multi-exposure fusion method

It is assumed that the multi-exposure sequence has been registered and the camera does not move. The input multi-exposure sequence is decomposed using the DT-CWT, with the R, G, and B channels decomposed separately. The DT-CWT of a two-dimensional image I(i, j), which is formulated using a scaling function and complex wavelet functions, is shown as

(1)

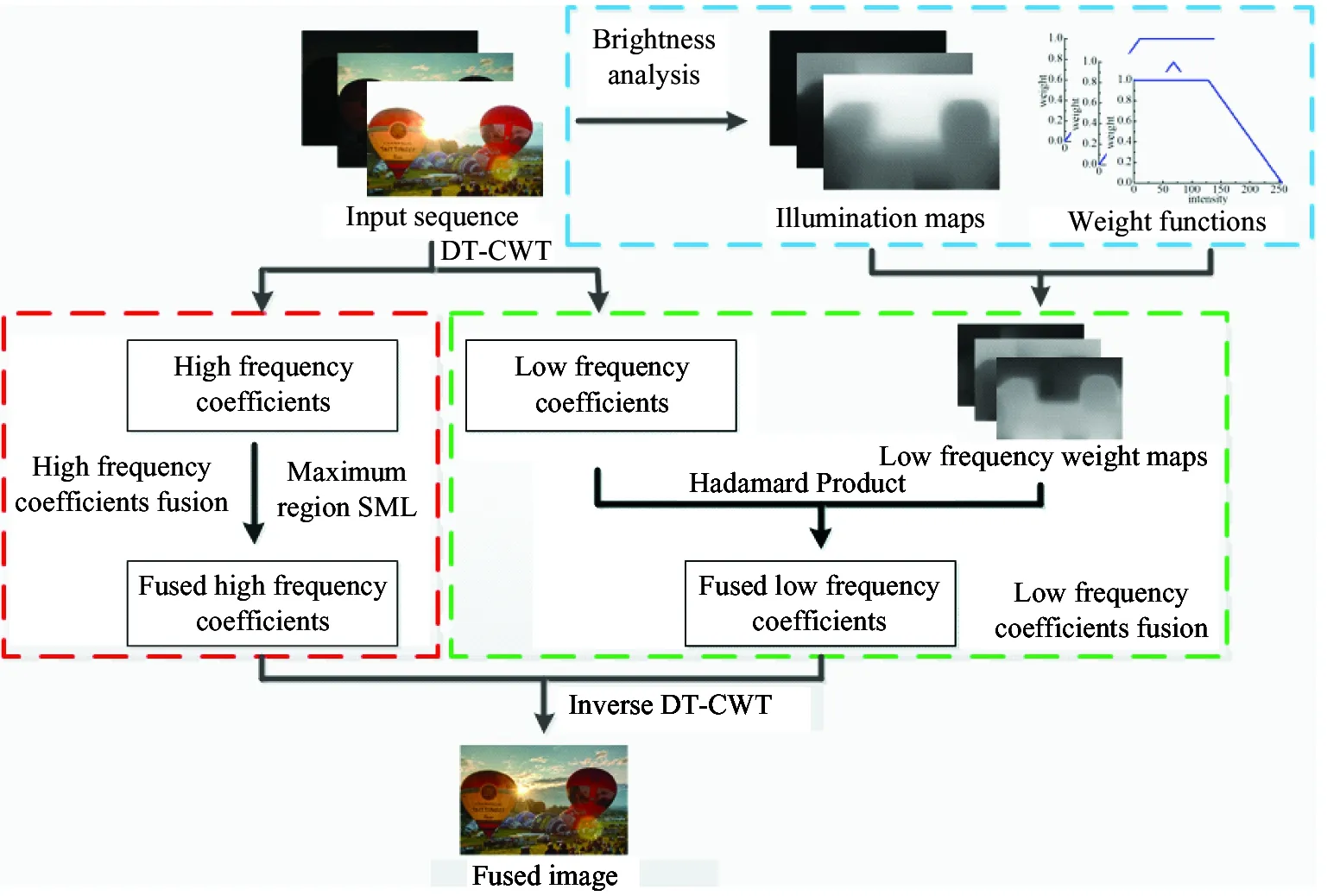

Fig.3 gives the algorithm flowchart. First, the input sequence is decomposed into high and low frequency coefficients by DT-CWT. Then the illumination of each input image is estimated to generate an illumination map, and a weight function is chosen accordingly. The box demonstrates the low frequency coefficients fusion which shall be introduced in Section 2.1. High frequency coefficients fusion shown in the other box is explained in Section 2.2. The final fusion result is obtained from the fused high frequency coefficients and the fused low frequency coefficients using the inverse DT-CWT.

Fig.3 Algorithm flowchart

2.1 Low frequency coefficients fusion

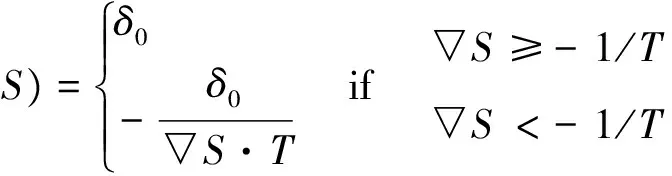

The fusion rules for low frequency coefficients should focus on the overall intensity rather than on each single pixel. Therefore, in this study, the illumination of every image is estimated first, and the weights are then distributed accordingly. The envelope filter[10] is adopted for illumination estimation because it eliminates weak fluctuations while retaining the main trends. Eq.(2) expresses the envelope filter, where the parameter δ is assigned according to Eq.(3).

·Sv+1,Sv}

(2)

(3)

Based on the filtering results, the estimated illumination maps $\{E_k\}$ ($k = 1, 2, \ldots, K$, where $K$ is the number of input images) are obtained. For example, Fig.4 shows the estimated illumination maps of Fig.1.

Fig.4 Estimated illumination maps of Fig.1

(4)

where (i, j) indicates the matrix coordinate of weight maps. Fig.5 displays the final weight maps for low frequency of Fig.1.

Fig.5 Low frequency weight maps of Fig.1

(5)
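The weighting idea of this subsection can be sketched as follows. This is only an illustration under stated assumptions: the weight functions are taken to be simple linear ramps (the actual curves are those of Fig.2), the weights are assumed to be normalized per pixel so that they sum to one, and the illumination maps are assumed to have been resampled to the size of the low frequency band. The names `weight_from_illumination` and `fuse_low_frequency` are hypothetical.

```python
def weight_from_illumination(e, brightness):
    """Hypothetical weight for an illumination value e in [0, 255]."""
    x = e / 255.0
    if brightness == "low":       # dark image: bright pixels are well exposed
        return x
    if brightness == "high":      # bright image: dark pixels are well exposed
        return 1.0 - x
    return 1.0 - abs(2.0 * x - 1.0)   # medium image: favour mid-tones


def fuse_low_frequency(lows, illums, labels, eps=1e-6):
    """Fuse K low frequency bands (2-D lists) with normalized weight maps.

    lows:   K low frequency bands of equal size.
    illums: K illumination maps, assumed to match the band size.
    labels: K brightness labels ('low', 'medium', or 'high').
    eps:    small constant that avoids division by zero.
    """
    rows, cols = len(lows[0]), len(lows[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            w = [weight_from_illumination(e[i][j], lab) + eps
                 for e, lab in zip(illums, labels)]
            total = sum(w)   # per-pixel normalization over the K images
            fused[i][j] = sum(wk * low[i][j] for wk, low in zip(w, lows)) / total
    return fused
```

For two inputs labelled "low" and "high", a pixel whose illumination is high in both maps takes almost all of its fused value from the dark image, which is the behaviour described in Section 1.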

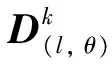

2.2 High frequency coefficients fusion

High frequency coefficients reflect image details such as edges and textures. The larger a high frequency coefficient is, the more obvious the illumination changes are and the richer the edges and textures are. The Sum Modified-Laplacian (SML) reflects image details and definition accurately, and it distinguishes definition better than other measures of definition. SML also performs well in image fusion. To obtain results with better visual effect and extensive details, the “maximum region SML” rule is adopted for high frequency coefficients fusion.

(6)

where (i, j) indicates the coordinate, * means the Hadamard product, and $L_x$ and $L_y$ indicate the second-order difference operators in the x-direction and y-direction, which are shown in

(7)

(8)

(9)

(10)
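A minimal sketch of the maximum region SML rule is given here. It assumes the common form of the modified Laplacian (the sum of the absolute second-order differences along x and y), a square summation window with a hypothetical `radius` parameter, and clamped borders; the exact operators and window used in the paper are those of Eqs.(6)~(10). Function names are illustrative. Python's `abs` also accepts complex values, so the same code applies to complex DT-CWT coefficients.

```python
def modified_laplacian(band, i, j):
    """Absolute second-order differences along x and y at (i, j).

    Border pixels reuse the nearest valid neighbour (clamping), which is
    an assumption of this sketch.
    """
    rows, cols = len(band), len(band[0])
    up, down = band[max(i - 1, 0)][j], band[min(i + 1, rows - 1)][j]
    left, right = band[i][max(j - 1, 0)], band[i][min(j + 1, cols - 1)]
    c = band[i][j]
    return abs(2 * c - up - down) + abs(2 * c - left - right)


def sml_map(band, radius=1):
    """Sum the modified Laplacian over a (2*radius+1)^2 window per pixel."""
    rows, cols = len(band), len(band[0])
    ml = [[modified_laplacian(band, i, j) for j in range(cols)]
          for i in range(rows)]
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            out[i][j] = sum(
                ml[a][b]
                for a in range(max(i - radius, 0), min(i + radius, rows - 1) + 1)
                for b in range(max(j - radius, 0), min(j + radius, cols - 1) + 1)
            )
    return out


def fuse_high_frequency(bands, radius=1):
    """Per coefficient, keep the value from the band with the largest SML."""
    maps = [sml_map(b, radius) for b in bands]
    rows, cols = len(bands[0]), len(bands[0][0])
    fused = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            best = max(range(len(bands)), key=lambda k: maps[k][i][j])
            fused[i][j] = bands[best][i][j]
    return fused
```

When several bands tie at a position, `max` returns the first one, so the input order decides the tie.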

3 Experiment and discussion

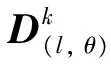

Fig.6(a) and (b) show the multi-exposure sequence “Desk” with the size of 780×520. Fig.6(c)~(g) show the fusion results of the methods proposed in Refs[2-6]. The result of the proposed method is shown in Fig.6(h). In Fig.6(d) and (e), there is a black shadow at the upper right corner. This phenomenon also exists in Fig.6(c), but it is less serious. The upper right corner of the source images is wall space, which should not be black, so Fig.6(d) and (e) are inaccurate in terms of local brightness. Fig.6(g) is darker than the other results. In the proposed result, the intensity is appropriate and suitable for human vision.

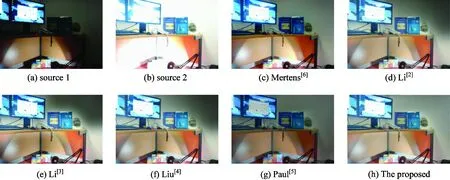

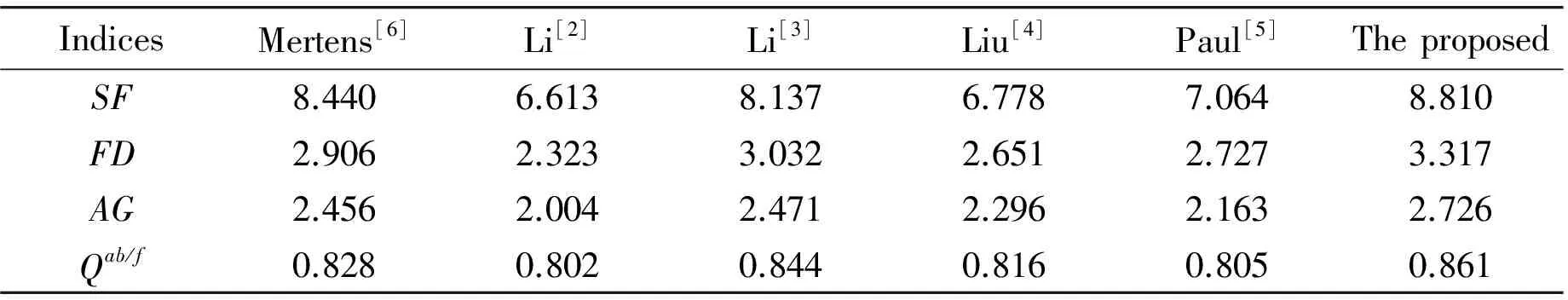

To evaluate the proposed algorithm objectively, spatial frequency (SF), figure definition (FD), average gradient (AG), and the edge information transferred from the source images to the fused image ($Q^{ab/f}$)[11] are adopted for evaluating the performance of different exposure fusion methods.

Spatial frequency (SF) is the number of repeated changes in the image function per unit length. SF reflects the change characteristics in the spatial domain and the sharpness of the fused image. Figure definition (FD) reflects the clarity of images. This paper adopts the rate of change of the gray value to measure figure definition. Average gradient (AG) is the average gray gradient of an image. AG measures the features of small details, namely, the contrast and texture changes of the fused image. SF, FD and AG are image quality evaluation metrics that measure the quality of the fused image itself. For SF, FD and AG, a larger value indicates a better result. $Q^{ab/f}$ calculates how much edge information is transferred from the input images to the fused image, and it measures the performance of a fusion algorithm from the perspective of similarity. It generates a quality value that ranges from 0 to 1, and a higher value indicates better quality.
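As an illustration of two of these metrics, the sketch below uses definitions that are common in the fusion literature, assumed here because the formulas are not given in the text: SF as the root of the summed mean squared row and column differences, and AG as the mean of $\sqrt{(\Delta_x^2 + \Delta_y^2)/2}$ over forward differences. The function names are hypothetical, and images are plain 2-D lists of gray values.

```python
import math


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2), with RF and CF the row and column frequencies."""
    rows, cols = len(img), len(img[0])
    # Squared horizontal differences (row frequency).
    rf = sum((img[i][j] - img[i][j - 1]) ** 2
             for i in range(rows) for j in range(1, cols))
    # Squared vertical differences (column frequency).
    cf = sum((img[i][j] - img[i - 1][j]) ** 2
             for i in range(1, rows) for j in range(cols))
    return math.sqrt((rf + cf) / (rows * cols))


def average_gradient(img):
    """Mean gradient magnitude computed from forward differences."""
    rows, cols = len(img), len(img[0])
    if rows < 2 or cols < 2:
        raise ValueError("image must be at least 2x2")
    total = 0.0
    for i in range(rows - 1):
        for j in range(cols - 1):
            dx = img[i][j + 1] - img[i][j]
            dy = img[i + 1][j] - img[i][j]
            total += math.sqrt((dx * dx + dy * dy) / 2.0)
    return total / ((rows - 1) * (cols - 1))
```

Both functions return 0 for a constant image and grow as the image gains edges and texture, which is why larger values are read as sharper results.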

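Fig.6 “Desk” sequence and its exposure fusion results using 6 different methods

Table 1 Objective evaluation metrics of “Desk” sequence

| Indices | Mertens[6] | Li[2] | Li[3] | Liu[4] | Paul[5] | The proposed |
| --- | --- | --- | --- | --- | --- | --- |
| SF | 20.308 | 17.963 | 22.162 | 18.509 | 12.966 | 22.723 |
| FD | 5.785 | 5.131 | 6.259 | 5.277 | 4.109 | 6.752 |
| AG | 4.387 | 3.963 | 4.837 | 4.079 | 3.108 | 5.042 |
| $Q^{ab/f}$ | 0.879 | 0.863 | 0.888 | 0.869 | 0.640 | 0.902 |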

Fig.7 “Candle” sequence and its exposure fusion results using 6 different methods

Fig.7(a) shows the multi-exposure sequence “Candle”, and Fig.7(b)~(g) display the results of the 6 methods. The image size is 512×364. In Fig.7(b)~(e), the brightness of the wall around the window is non-uniform and inappropriate, especially in Fig.7(d). In Fig.7(f), the global brightness is also too dark, whereas the proposed result has good visibility.

The objective evaluation metrics of Fig.7 are shown in Table 2. As can be seen, the results of the proposed method have the best SF, FD, AG and $Q^{ab/f}$.

Table 2 Objective evaluation metrics of “Candle” sequence

The proposed algorithm can be applied to various multi-exposure sequences and obtains satisfying results. More fusion results are shown in Fig.8. The proposed multi-exposure fusion method is robust in various scenes.

Fig.8 Results of different image sequences

4 Conclusion

A multi-exposure fusion algorithm is proposed for high dynamic range scenes. The multi-exposure images are decomposed into low and high frequency components by DT-CWT. Three different weight functions, selected according to the brightness analysis of the images, are then proposed for low frequency coefficients fusion, which generates fusion results with suitable brightness. For high frequency coefficients fusion, maximizing the region Sum Modified-Laplacian is adopted, so the image details are preserved effectively. Experiments demonstrate that the proposed algorithm generates high quality images in various scenes. The obtained images have suitable overall brightness and preserve details and edges effectively. The proposed algorithm is efficient and robust in various scenes.

References

[1] Shen X H, Liu J H, Chu G S. Auto exposure algorithm for aerial camera based on histogram statistics method. Journal of Electronics & Information Technology, 2016, 38(3): 541-548

[2] Li S T, Kang X D. Fast multi-exposure image fusion with median filter and recursive filter. IEEE Transactions on Consumer Electronics, 2012, 58(2): 626-632

[3] Li S T, Kang X D, Hu J. Image fusion with guided filtering. IEEE Transactions on Image Processing, 2013, 22(7): 2864-2875

[4] Liu Y, Wang Z F. Dense SIFT for ghost-free multi-exposure fusion. Journal of Visual Communication and Image Representation, 2015, 31(C): 208-224

[5] Paul S, Sevcenco S I, Agathoklis P. Multi-exposure and multi-focus image fusion in gradient domain. Journal of Circuits, Systems and Computers, 2016, 16(6): 123-131

[6] Mertens T, Kautz J, Reeth F V. Exposure fusion: a simple and practical alternative to high dynamic range photography. Computer Graphics Forum, 2009, 28(1): 161-171

[7] Kingsbury N. The dual-tree complex wavelet transform: a new efficient tool for image restoration and enhancement. In: Proceedings of the 9th European Signal Processing Conference, Island of Rhodes, Greece, 1998. 1-4

[8] Selesnick I W, Baraniuk R G, Kingsbury N. The dual-tree complex wavelet transform. IEEE Signal Processing Magazine, 2005, 22(6): 123-151

[9] Zhao H, Li Q, Feng H. Multi-focus color image fusion in the HSI space using the sum-modified-Laplacian and a coarse edge map. Image & Vision Computing, 2008, 26(9): 1285-1295

[10] Shaked D, Keshet R. Robust recursive envelope operators for fast Retinex, HPL-2002-74(R.1). Haifa: Hewlett Packard Research Laboratories, 2004

[11] Petrovic V, Xydeas C S. Objective image fusion performance characterisation. In: Proceedings of the 10th IEEE International Conference on Computer Vision, Beijing, China, 2005. 17-21

10.3772/j.issn.1006-6748.2017.04.001

Shen Xiaohe received the Ph.D degree from the University of Chinese Academy of Sciences in 2017. She received the B.S. degree in applied physics from Jilin University in 2012. She works at the Beijing Institute of Aerospace Control Devices now. Her current research interests include automatic exposure control and exposure fusion.

High Technology Letters, 2017, Issue 4
