Image segmentation of maize stubble rows based on support vector machine

Wang Chunlei, Lu Caiyun, Li Hongwen※, He Jin, Wang Qingjie, Jiang Shan

· Agricultural Information and Electrical Technologies ·

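(1. College of Engineering, China Agricultural University, Beijing 100083, China; 2. Scientific Observing and Experimental Station of Arable Land Conservation (North Hebei), Ministry of Agriculture and Rural Affairs, Beijing 100083, China)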

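Accurate identification of maize stubble rows is a prerequisite for machine-vision-based automatic row-following no-till wheat seeding in maize-wheat rotation. Stubble rows left by combine harvesters with stubble retention in the double-cropping region of North China are difficult to segment accurately. To address this, the study took standing maize stubble as the research object and proposed a stubble row segmentation method based on a support vector machine (SVM). First, principal component analysis (PCA) was used to analyze the color and texture features extracted from the target (standing stubble) and the background (inter-row straw and bare soil surface); 21 features were selected to form the feature vector used as input for training the standing-stubble SVM recognition model. Then, based on image coordinates, the rectangular region in the middle of the image that contains a complete maize stubble row was set as the region of interest (ROI). Finally, the trained model was slid over the ROI with a 25×25 (pixel) window, the stubble row was segmented by thresholding, and morphological processing was applied to obtain the final binary image of the stubble row. The method was tested on 100 maize stubble row images collected at the Scientific Observing and Experimental Station of Arable Land Conservation (North Hebei), Ministry of Agriculture and Rural Affairs. The results show that the method is accurate and robust under different inter-row straw cover amounts and lighting conditions. The average recognition accuracy of standing stubble, the average segmentation accuracy, the average recall, and the F1 value (the weighted harmonic mean of average segmentation accuracy and average recall) were 93.8%, 93.72%, 92.35% and 93.03%, respectively. The average segmentation time per image was 0.06 s, which indicates good real-time performance. The SVM-based method can segment stubble row images taken after combine harvesting with stubble retention, and it provides a sound basis for subsequent work on detecting the stubble row line and using it as the baseline for visual navigation.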

machine vision; image segmentation; support vector machine; principal component analysis; maize stubble row

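0 Introduction

The double-cropping region of North China is one of China's main grain production bases, and keeping its grain yield stable and high is important for national food security[1]. No-till seeding sows directly into fields with retained straw and stubble and keeps crop residues on the soil surface. It saves water, resists drought, raises soil organic matter and increases crop yield, and therefore helps to keep grain yield stable and high[2-3]. However, the straw and stubble covering the surface easily block no-till seeders and seriously degrade seeding quality, so improving the trafficability of the machinery is the key to applying no-till seeding[4-5].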

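Machine-vision-based automatic row-following no-till wheat seeding detects the position of maize stubble rows with machine vision and then guides the row-following no-till wheat seeder to avoid the maize stubble automatically. This improves the trafficability of the seeder and realizes the benefits of no-till seeding, while also reducing operator workload and improving working efficiency and seeding quality. It is therefore important for the development of intelligent conservation tillage[6]. Maize stubble row recognition is the prerequisite for this technology in maize-wheat rotation, and its performance directly affects row-following accuracy and seeding quality. To improve recognition performance, researchers have studied stubble rows in different field environments. Chen Yuan[7] studied maize stubble harvested with high stubble and no inter-row straw cover, and proposed a stubble row segmentation method that selects the threshold iteratively. The method segments stubble rows effectively and processes a 640×480 (pixel) color image in 135 ms, but it performs poorly when straw covers the inter-row area. Chen Wanzhi[8] studied stubble rows harvested with stubble retention and inter-row straw cover, and proposed a segmentation method based on a genetic algorithm and threshold noise filtering. It processes a 1 280×1 024 (pixel) image in 160 ms with a relative area error rate of 24.68%, but its accuracy, real-time performance and robustness still need improvement.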

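Compared with other crops, images of maize stubble rows taken after combine harvesting with stubble retention have several distinctive characteristics: the target (standing stubble) is similar in color to the background (inter-row straw and bare soil surface), the inter-row straw is irregular in shape, and both straw cover amount and lighting vary widely. Because standing stubble is hard to segment accurately against such a background, this paper takes standing maize stubble left by combine harvesting with stubble retention as the research object and proposes a maize stubble row segmentation method based on a support vector machine. The aim is to provide a reference for stubble row segmentation and for subsequent fast detection of stubble rows.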

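1 Image acquisition

The maize stubble row images were collected in early October 2019 at the Scientific Observing and Experimental Station of Arable Land Conservation (North Hebei), Ministry of Agriculture and Rural Affairs. The stubble was about 30 to 40 cm high. Images were taken with a Nikon D5100 digital camera facing the stubble row, about 1 m above the ground and at an angle of about 30° to the horizontal. To ensure sample diversity, four plots were chosen at random and the inter-row straw cover was set manually to 0, 1, 2 and 3 kg/m². Images were taken for each of the four cover amounts between 8:00 and 18:00, covering front lighting, direct sunlight and backlighting on sunny days, as well as cloudy days. For the plots with no inter-row straw, the surface moisture content changed with time and weather, so the surface images show different colors and textures, which further increases sample diversity. In total 400 images were collected, 100 for each cover amount, at a resolution of 4 928×3 264 (pixels). From each cover amount, 75 images were selected at random, giving 300 images from which target (standing stubble) and background (inter-row straw and bare soil surface) sample images were obtained as training samples. The remaining 100 images were used as test samples. To improve the training speed and recognition accuracy of the standing-stubble recognition model and the later segmentation performance, and following related work on image segmentation and visual navigation[9-10], all images were resized to 640×480 (pixels).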
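The per-cover-amount split described above (75 training and 25 test images from each of the four straw cover amounts) can be sketched as follows. This is a minimal illustration only: the file names, the `split_by_coverage` helper and the random seed are hypothetical and do not come from the paper.

```python
import random

def split_by_coverage(image_ids_by_coverage, n_train=75, seed=0):
    """Randomly pick n_train images per cover amount; the rest become test images."""
    rng = random.Random(seed)
    train, test = [], []
    for coverage, ids in sorted(image_ids_by_coverage.items()):
        shuffled = list(ids)
        rng.shuffle(shuffled)
        train += [(coverage, name) for name in shuffled[:n_train]]
        test += [(coverage, name) for name in shuffled[n_train:]]
    return train, test

# Hypothetical identifiers: 100 images for each cover amount (kg/m^2).
images = {cov: [f"cov{cov}_{k:03d}.jpg" for k in range(100)] for cov in (0, 1, 2, 3)}
train_set, test_set = split_by_coverage(images)
print(len(train_set), len(test_set))  # 300 100
```

Splitting within each cover amount, rather than over the pooled 400 images, keeps every cover amount equally represented in both sets.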

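2 SVM-based maize stubble row segmentation method

The maize stubble row segmentation method based on a support vector machine (SVM) has three main steps, as shown in Fig. 1. The first step is feature extraction and model training. Sample images of the target (standing stubble) and the background (inter-row straw and bare soil surface) are obtained from the training samples, and their color and texture features are extracted in different color spaces. Principal component analysis then selects the features that best separate the two classes, and these are used to train the standing-stubble SVM recognition model. The second step is selection of the region of interest: to improve segmentation efficiency, the rectangular region that contains the complete maize stubble row in the middle of the image is taken as the region of interest (ROI). The third step is stubble row segmentation. The SVM recognition model is slid over the ROI window by window to identify standing stubble, the stubble row is segmented by thresholding, and morphological processing yields the final binary image of the stubble row.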

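2.1 Feature extraction and training of the standing-stubble SVM recognition model

Training the standing-stubble recognition model requires features of the target (standing stubble) and the background (inter-row straw and bare soil surface) as image descriptors. Standing maize stubble is close in color to inter-row straw and bare soil and is hard to distinguish by eye. To find features that separate them, 1 500 standing-stubble samples, 1 500 inter-row straw samples and 1 500 bare-surface samples were collected with a 25×25 (pixel) window. Each standing-stubble sample contains the full stubble outline across its width, and the samples cover different lighting and straw cover conditions. The inter-row straw samples cover different lighting, cover amounts and straw shapes, and the bare-surface samples cover different lighting and surface shapes. Color and texture features of the target and background images were extracted to build a high-dimensional feature vector, which was analyzed by principal component analysis to select the features that best separate the two classes.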

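In addition, a suitable choice of color space helps to express the color features of standing stubble and reduces the effect of lighting changes and complex environments on segmentation. Five common color spaces were used: RGB, HSV, Lab, YCbCr and YIQ. From these, 17 components were chosen for the subsequent extraction of color and texture features: R, G, B, H, S, V, L, a, b, YCbCr-Y, Cb, Cr, YIQ-Y, I, Q, (R+G+B)/3, and −0.74R+0.98G+0.875B[8].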
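As an illustration of how some of these components can be derived from an RGB window, the sketch below computes (R+G+B)/3, the operator −0.74R+0.98G+0.875B, and the three YIQ channels. The YIQ coefficients are the commonly used NTSC values and are an assumption here, since the paper does not list them. The function name `extra_components` is hypothetical.

```python
import numpy as np

def extra_components(rgb):
    """Return (R+G+B)/3, -0.74R+0.98G+0.875B and the YIQ channels of an RGB array."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    mean_rgb = (r + g + b) / 3.0
    custom = -0.74 * r + 0.98 * g + 0.875 * b
    # Common NTSC RGB -> YIQ coefficients (assumed; not given in the paper).
    y = 0.299 * r + 0.587 * g + 0.114 * b
    i = 0.596 * r - 0.274 * g - 0.322 * b
    q = 0.211 * r - 0.523 * g + 0.312 * b
    return {"mean_rgb": mean_rgb, "custom": custom, "YIQ-Y": y, "I": i, "Q": q}

window = np.full((25, 25, 3), 128, dtype=np.uint8)  # a uniform grey 25x25 window
comps = extra_components(window)
```

For a neutral grey pixel the chrominance channels I and Q are zero, which is a quick sanity check on the coefficients.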

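2.1.1 Color feature extraction

A total of 697 color features were extracted from the 17 components, 41 per component. They include the mean, variance, maximum, minimum, second-order moment and third-order moment[11], together with covariance-based statistics. The covariance-based statistics include the mean, variance and maximum of the covariance, the maximum and the mean of its row-wise and column-wise standard deviations, and the mean, maximum and minimum of the diagonalized covariance. Each value is computed in the order given by its name. For example, the mean of the column-wise standard deviation is obtained by first computing the standard deviation of each column and then averaging those standard deviations.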
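A few of the per-component statistics named above can be sketched with NumPy as follows. The sketch covers only a subset of the 41 features. The second- and third-order moments follow the usual color-moment definitions (square root and cube root of the central moments), which is an assumption because the paper does not spell them out. `color_statistics` is a hypothetical helper name.

```python
import numpy as np

def color_statistics(channel):
    """Subset of the per-component color statistics for one 2-D window."""
    x = np.asarray(channel, dtype=np.float64)
    mu = x.mean()
    cov = np.cov(x, rowvar=False)  # covariance between the window's columns
    return {
        "mean": mu,
        "variance": x.var(),
        "max": x.max(),
        "min": x.min(),
        # Color-moment style definitions (assumed, see lead-in).
        "second_moment": np.sqrt(np.mean((x - mu) ** 2)),
        "third_moment": np.cbrt(np.mean((x - mu) ** 3)),
        "cov_mean": cov.mean(),
        # "Mean of column-wise standard deviation": std per column first, then the mean.
        "col_std_mean": x.std(axis=0).mean(),
    }

stats = color_statistics(np.arange(625).reshape(25, 25))
```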

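2.1.2 Texture feature extraction

A total of 1 513 texture features were extracted from the 17 components, 89 per component, in five groups:

- 40 features from the gray-level co-occurrence matrix, including angular second moment, entropy, moment of inertia, contrast and inverse variance, each in four directions (0°, 45°, 90° and 135°)[12-14].
- 14 features from skewness and kurtosis, namely the mean, maximum, minimum, variance and covariance of each, among others[15-17].
- 23 features from the Tamura method, including coarseness, contrast, directionality and line-likeness under different parameter settings[18-20].
- 8 features from the histogram of oriented gradients, namely the mean, maximum, minimum and standard deviation of the gradient magnitude and of the gradient direction[21-22].
- 4 features from the fast Fourier transform, namely the mean, maximum, minimum and standard deviation of the spectrum[23].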
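To make the gray-level co-occurrence matrix (GLCM) features concrete, here is a minimal NumPy sketch. The quantization to 8 gray levels, the symmetric normalization and the helper names `glcm` and `glcm_features` are assumptions for illustration; the paper does not state its GLCM settings. Only four of the statistics are shown.

```python
import numpy as np

def glcm(gray, dx, dy, levels=8):
    """Normalised, symmetric co-occurrence matrix for the pixel offset (dx, dy)."""
    g = np.asarray(gray, dtype=np.int64) * levels // 256  # quantise 0..255 to 0..levels-1
    h, w = g.shape
    # Keep only reference pixels whose neighbour at (dx, dy) is inside the window.
    y0, y1 = max(0, -dy), min(h, h - dy)
    x0, x1 = max(0, -dx), min(w, w - dx)
    ref = g[y0:y1, x0:x1].ravel()
    nbr = g[y0 + dy:y1 + dy, x0 + dx:x1 + dx].ravel()
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (ref, nbr), 1.0)  # count co-occurring grey-level pairs
    m += m.T
    return m / m.sum()

def glcm_features(p):
    """Angular second moment, entropy, contrast and inverse difference moment."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "asm": float(np.sum(p ** 2)),
        "entropy": float(-np.sum(nz * np.log2(nz))),
        "contrast": float(np.sum((i - j) ** 2 * p)),
        "idm": float(np.sum(p / (1.0 + (i - j) ** 2))),
    }

# Offsets for 0, 45, 90 and 135 degrees (image rows grow downwards).
OFFSETS = {0: (1, 0), 45: (1, -1), 90: (0, -1), 135: (-1, -1)}
window = np.full((25, 25), 100, dtype=np.uint8)
features = {angle: glcm_features(glcm(window, dx, dy)) for angle, (dx, dy) in OFFSETS.items()}
```

A perfectly uniform window is the degenerate case: all co-occurrences fall in one cell, so the angular second moment is 1 and both entropy and contrast are 0.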

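2.1.3 Feature selection

Principal component analysis (PCA) is a widely used method for dimensionality reduction and feature selection. It applies an orthogonal transformation that converts a set of linearly correlated variables into linearly uncorrelated ones, called principal components[24-27]. In this study PCA was applied to the extracted color and texture features, and features were selected according to the variance contribution of the principal components and the weight coefficients of the original features.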

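From the target (standing stubble) and background (inter-row straw and bare soil surface) samples, 697 color features and 1 513 texture features were obtained, each with 4 500 sample values. First, 420 features containing null values were removed by manual screening, and the remaining features formed a 1 790-dimensional feature vector. PCA of this vector showed that the first two principal components had a cumulative variance contribution of 99.99%, and the first principal component alone contributed 98.2%, so the first principal component was chosen for further analysis. Because most weight coefficients were small, their base-10 logarithm (lg) was taken, giving the curve of logarithmic weight coefficients of the features in the first principal component (Fig. 2).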

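Based on the distribution of the logarithmic weight coefficient curve, and weighing recognition accuracy against efficiency, the first 21 features with a logarithmic weight coefficient greater than −6.35 (Table 1) were chosen as the optimal features. They form a 21-dimensional feature vector that is the input for training the standing-stubble SVM recognition model.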
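The selection procedure (PCA, then ranking features by the logarithm of their weight in the first principal component) might look like the sketch below. Several points are assumptions: the features are only mean-centered and not standardized, the weight coefficient is taken as the absolute value of the loading, and the data are synthetic. `select_features_by_pc1` is a hypothetical name.

```python
import numpy as np

def select_features_by_pc1(X, k):
    """Rank features by lg|weight| in the first principal component and keep the top k."""
    Xc = X - X.mean(axis=0)  # centre each feature (no scaling: an assumption)
    # Rows of vt are the principal directions of the centred data.
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)  # variance contribution of each component
    weights = np.abs(vt[0])              # weight of each feature in PC1
    log_w = np.log10(np.maximum(weights, np.finfo(float).tiny))
    order = np.argsort(log_w)[::-1]      # largest logarithmic weight first
    return order[:k], log_w, explained

# Synthetic data only: 200 samples, 5 features with very different variances.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5)) * np.array([100.0, 50.0, 1.0, 0.1, 0.01])
selected, log_w, explained = select_features_by_pc1(X, k=2)
```

Without standardization the first component is dominated by the features with the largest variance, which may be why a single component explains so much of the variance in the paper's data. That reading is a guess, not something the paper states.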

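2.1.4 Training of the standing-stubble SVM recognition model

The support vector machine (SVM) is a classical supervised machine learning method. It can construct the maximum-margin decision surface between classes even with small sample sizes and nonlinear data, and it offers strong generalization, high accuracy and high efficiency[28-30].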

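The selected 21-dimensional feature vectors are the training input of the standing-stubble SVM recognition model, with training labels in {−1, 1}. The 1 500 standing-stubble training samples are labeled 1, and the 3 000 inter-row straw and bare-surface training samples are labeled −1. Training aims to find the hyperplane that separates standing stubble from inter-row straw and bare soil with the largest margin. The kernel type and its parameters strongly affect model performance. The four common kernels are the linear, polynomial, sigmoid and radial basis function (RBF) kernels. After repeated trials, the RBF kernel, which gave the higher recognition accuracy, was chosen. Because the ratio of standing-stubble samples to background samples is 1:2, grid search with 5-fold stratified cross-validation was used to find the optimal values of the penalty coefficient C and the kernel parameter γ. Both C and γ were searched over the range 2⁻¹⁰ to 2¹⁰, in the plane formed by the two parameters, with the mean stratified cross-validation accuracy on the training set as the criterion to maximize. The final choice was C = 72 and γ = 5, which gave the highest model accuracy, 96.8%.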
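The training procedure described above corresponds closely to scikit-learn's `GridSearchCV` with `StratifiedKFold`. The sketch below runs on synthetic data standing in for the 21-dimensional feature vectors, and it steps the exponent by 2 to keep the grid small. Both choices are assumptions, and the result is not expected to reproduce the paper's parameters or accuracy.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.svm import SVC

rng = np.random.default_rng(0)
# Synthetic stand-in for the 21-dimensional feature vectors (NOT the paper's data):
# 60 "stubble" samples (label 1) and 120 "background" samples (label -1), a 1:2 ratio.
X = np.vstack([rng.normal(1.5, 1.0, (60, 21)), rng.normal(0.0, 1.0, (120, 21))])
y = np.array([1] * 60 + [-1] * 120)

# Penalty coefficient C and RBF kernel parameter gamma over 2^-10 .. 2^10.
# The exponent step of 2 is an assumption made to keep the example fast.
param_grid = {
    "C": 2.0 ** np.arange(-10, 11, 2),
    "gamma": 2.0 ** np.arange(-10, 11, 2),
}
# Stratified folds keep the 1:2 class ratio in every fold.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=cv, scoring="accuracy")
search.fit(X, y)
print(search.best_params_, round(search.best_score_, 3))
```

`search.best_estimator_` is then the refitted model that would be applied window by window during segmentation.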

Table 1 Selected optimal features and the logarithms of their weight coefficients

Note: L is the L component of the Lab color space; a is the a component of the Lab color space; b is the b component of the Lab color space; R is the R component of the RGB color space; G is the G component of the RGB color space; B is the B component of the RGB color space; V is the V component of the HSV color space; YIQ-Y is the Y component of the YIQ color space; YCbCr-Y is the Y component of the YCbCr color space.

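2.2 Selection of the region of interest for the maize stubble row

The goal of maize stubble row image segmentation is to segment the complete stubble row in the middle of the image, so the rectangular region containing that row is processed as the ROI. The top-left corner of the image is defined as the origin of the image coordinates. The x axis points to the right and spans the image width (width), and the y axis points downward and spans the image height (height). Fifty images were selected at random from the collected images, and the coordinate range of the stubble row region was marked by hand. Weighing recognition performance against segmentation efficiency, the ROI was set to the rectangle whose diagonal corner points are (7width/16, 0) and (19width/32, height).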
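For the 640×480 images used in this study, the ROI corner points work out as in the short sketch below. The helper name `roi_bounds` is hypothetical, and integer division is an assumption that only matters when the width is not a multiple of 32.

```python
def roi_bounds(width, height):
    """Corner points (x0, y0) and (x1, y1) of the ROI rectangle from Section 2.2."""
    x0 = 7 * width // 16
    x1 = 19 * width // 32
    return (x0, 0), (x1, height)

print(roi_bounds(640, 480))  # ((280, 0), (380, 480))
```

The resulting ROI is 100 pixels wide, that is, four 25×25 windows per row of windows.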

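2.3 Stubble row segmentation method

The L component of the Lab color space is taken as the grayscale image to be segmented, and the gray values of the L component outside the ROI are set to 0. The sliding recognition of standing stubble and the segmentation of the stubble row follow the flow in Fig. 3. The steps are:

1) Take the left end of the ROI as the starting point and slide a 25×25 (pixel) window over the ROI from left to right and from top to bottom.

2) Extract the values of the 21 features in the current window to form a feature vector, and predict its label with the standing-stubble SVM recognition model. If the predicted label is −1, go to step 3); otherwise go to step 4).

3) Set the gray values of the L component at the position of the current window to 0, move the window 25 pixels to the right and 0 pixels down, and repeat step 2).

4) If the predicted label of the current window is 1, the window belongs to standing stubble. Set the gray values of the L component at the position of the current window to 255 (the maximum gray value) so that the stubble row can be segmented later, then return the window to the left end, move it 25 pixels down, and repeat step 2).

5) Repeat steps 3) and 4) until the whole ROI has been processed.

After steps 1) to 5), the gray values of the standing-stubble regions are 255, so the L component is segmented by thresholding at 255. The resulting binary image is then processed by a morphological opening with a disc-shaped structuring element of radius 2 to remove noise such as burrs and holes, which gives the final binary image of the maize stubble row.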
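Steps 1) to 5) and the final opening can be sketched in NumPy as below. The classifier is passed in as a callable `predict`, which stands in for feature extraction plus the trained SVM. Three points are assumptions rather than statements from the paper: a row of windows with no stubble simply advances to the next row, the disc is approximated by the pixels within Euclidean distance 2, and the names `segment_row` and `binary_open` are hypothetical.

```python
import numpy as np

def segment_row(l_channel, roi, predict, win=25):
    """Slide a win x win window over the ROI and return a binary stubble mask.

    predict(window) must return 1 for standing stubble and -1 for background.
    """
    (x0, y0), (x1, y1) = roi
    out = np.zeros_like(l_channel, dtype=np.uint8)   # everything starts at 0
    for top in range(y0, y1 - win + 1, win):          # move down 25 px per row
        for left in range(x0, x1 - win + 1, win):     # move right 25 px per step
            if predict(l_channel[top:top + win, left:left + win]) == 1:
                out[top:top + win, left:left + win] = 255
                break                                 # step 4: back to the left end, next row
    return (out == 255).astype(np.uint8)              # threshold at 255

def binary_open(mask, radius=2):
    """Opening (erosion, then dilation) with a disc-shaped structuring element."""
    offsets = [(dy, dx) for dy in range(-radius, radius + 1)
               for dx in range(-radius, radius + 1)
               if dy * dy + dx * dx <= radius * radius]
    h, w = mask.shape

    def shifted(m):
        p = np.pad(m, radius, mode="constant", constant_values=0)
        return np.stack([p[radius + dy:radius + dy + h, radius + dx:radius + dx + w]
                         for dy, dx in offsets])

    eroded = shifted(mask).min(axis=0)    # pixel survives only if the whole disc fits
    return shifted(eroded).max(axis=0)    # grow the surviving pixels back

# Toy example: a bright vertical strip plays the role of the stubble row.
l_img = np.zeros((100, 100), dtype=np.uint8)
l_img[:, 25:50] = 200
toy_predict = lambda window: 1 if window.mean() > 100 else -1
mask = segment_row(l_img, ((0, 0), (100, 100)), toy_predict)
opened = binary_open(mask)
```

In practice OpenCV's `cv2.morphologyEx` with `cv2.MORPH_OPEN` would normally be used for the opening. The pure NumPy version is shown only to keep the sketch self-contained.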

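3 Maize stubble row segmentation test based on SVM

3.1 Test environment

The test platform was a Hewlett-Packard (HP) computer with an Intel(R) Core(TM) i7-9750H processor (12 logical cores, 2.60 GHz), 32 GB RAM and an NVIDIA GeForce GTX 1650 graphics card, running Windows 10. The programming language was Python 3.7, and the open-source libraries used for image processing and for training the standing-stubble SVM recognition model were NumPy, OpenCV and scikit-learn.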

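3.2 Maize stubble row segmentation and evaluation method

The test samples were used for maize stubble row segmentation. They comprise images taken under four inter-row straw cover amounts (0, 1, 2 and 3 kg/m²), 25 images each and 100 images in total. Each cover amount includes front lighting, direct sunlight and backlighting on sunny days, as well as cloudy days. The image resolution is 640×480 (pixels). To verify the feasibility and segmentation performance of the proposed method, a back propagation neural network (BPNN)[31] and an extreme learning machine (ELM)[32] were also trained as standing-stubble recognition models, giving a BPNN segmentation method and an ELM segmentation method. A segmentation method based on a genetic algorithm and threshold noise filtering[10] (hereafter the genetic method) was included as well. The results of these three methods were compared with those of the proposed method. Typical segmentation results are shown in Figs. 4 to 7.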

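The main parameters of the BP neural network and the extreme learning machine were set with reference to related work in machine learning and image processing[31-33] and to preliminary tests. The BP neural network has a four-layer 4500-300-20-2 structure with a sigmoid activation function, a learning rate of 0.02, a target error of 0.01 and 10 000 iterations. The extreme learning machine has a three-layer 4500-500-2 structure with a sigmoid activation function. The genetic method uses the graying operator −0.74R+0.98G+0.875B, with a segmentation threshold, an area threshold and an offset threshold of 150, 100 and 30, respectively.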

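To evaluate the segmentation performance of the proposed method objectively, the maize stubble rows were segmented by hand in Photoshop and the result was taken as the ground truth. Four indices were used to evaluate the segmentation results of the different methods quantitatively[34]: the average recognition accuracy of standing stubble A, the average segmentation accuracy P, the average recall R, and the weighted harmonic mean of P and R (the F1 value). The average recognition accuracy A measures the difference between the model's recognition results and the true results:

$$A = \frac{1}{N}\sum_{i=1}^{N}\frac{C_{1i}}{C_{1i}+C_{2i}}\times 100\%$$

where $C_{1i}$ is the number of standing stubbles correctly identified in the $i$-th image, $C_{2i}$ is the number of objects misidentified as standing stubble in the $i$-th image, and $N$ is the number of test images.

The average segmentation accuracy P reflects the difference between the segmentation result of the algorithm and the true segmentation:

$$P = \frac{1}{N}\sum_{i=1}^{N}\frac{P_{1i}}{P_{1i}+P_{2i}}\times 100\%$$

where $P_{1i}$ is the number of standing-stubble pixels correctly segmented in the $i$-th image and $P_{2i}$ is the number of pixels wrongly segmented as standing stubble in the $i$-th image.

The average recall R evaluates how complete the stubble row is after the segmentation algorithm has processed the image:

$$R = \frac{1}{N}\sum_{i=1}^{N}\frac{P_{1i}}{P_{1i}+P_{3i}}\times 100\%$$

where $P_{3i}$ is the number of standing-stubble pixels wrongly segmented as inter-row straw or bare soil surface in the $i$-th image.

The F1 value takes both accuracy and recall into account and gives an overall assessment of the segmentation result:

$$F_1 = \frac{2PR}{P+R}$$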
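The four indices can be computed as in the sketch below. The formulas are reconstructed from the symbol definitions in this section (per-image ratios averaged over the test images, and F1 as the harmonic mean of P and R), so they should be read as an assumption. The function name `evaluate` and the dictionary layout are hypothetical.

```python
def evaluate(per_image):
    """per_image: list of dicts with counts C1, C2 (stubbles) and P1, P2, P3 (pixels)."""
    n = len(per_image)
    A = sum(d["C1"] / (d["C1"] + d["C2"]) for d in per_image) / n  # recognition accuracy
    P = sum(d["P1"] / (d["P1"] + d["P2"]) for d in per_image) / n  # segmentation accuracy
    R = sum(d["P1"] / (d["P1"] + d["P3"]) for d in per_image) / n  # recall
    F1 = 2 * P * R / (P + R)                                       # harmonic mean of P and R
    return A, P, R, F1

# Consistency check against the values reported for the proposed method
# (P = 93.72 %, R = 92.35 %).
f1 = 2 * 93.72 * 92.35 / (93.72 + 92.35)
print(round(f1, 2))  # 93.03
```

The same check reproduces the reported F1 values of the comparison methods, for example 72.3% for the BPNN method from P = 61.88% and R = 86.94%.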

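4 Results and analysis

The segmentation results of the four methods show that, under different inter-row straw cover amounts and lighting conditions, the BPNN and ELM segmentation methods behave similarly. Both can segment the stubble row images, but the results are poor and show serious mis-segmentation. The likely reasons are as follows. When the inter-row straw cover is 0, several factors interfere with the recognition of standing stubble: stubble shadows under front lighting, direct sunlight and backlighting on sunny days; complex soil surface (mixed soil, fine straw fragments and so on); strongly lit areas under direct sunlight; and dark areas on cloudy days. As a result, inter-row straw or bare soil is predicted as standing stubble and mis-segmentation occurs, as in the results in Fig. 4 for front lighting, direct sunlight, backlighting and cloudy conditions. When the inter-row straw cover is 1, 2 or 3 kg/m², inter-row straw lying near the standing stubble and showing a similar color also interferes with recognition and causes mis-segmentation. The larger the straw cover, the more serious the mis-segmentation, as in the results in Figs. 5 to 7 for front lighting, direct sunlight, backlighting and cloudy conditions.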

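The genetic method adapts well to the inter-row straw cover amount and can segment the top cut of the stubble effectively under different cover amounts. However, it over-segments severely: parts of the top cut and the standing stubble below it are wrongly segmented as background, as in the results in Figs. 4 to 7 for front lighting and direct sunlight. Lighting also affects its results. Compared with front lighting and direct sunlight, mis-segmentation is more noticeable under backlighting and on cloudy days, as in the results in Figs. 4 to 7 for backlighting and cloudy conditions.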

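Compared with the BPNN, ELM and genetic methods, the proposed method segments the maize stubble row fairly accurately in complex environments with large amounts of inter-row straw and bare soil, as in the results in Figs. 4 to 7 for front lighting. It also copes with changes in inter-row straw cover: with a cover of 3 kg/m² it still segments well under front lighting, direct sunlight, backlighting and cloudy conditions, as shown in Fig. 7. In addition, it copes fairly well with lighting changes and segments well under direct sunlight, backlighting and cloudy conditions, as in the results in Figs. 4 to 6 for those conditions.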

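In summary, the segmentation results show that the proposed method performs well. It segments maize stubble rows effectively from complex stubble row images and adapts well to different inter-row straw cover amounts and lighting conditions.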

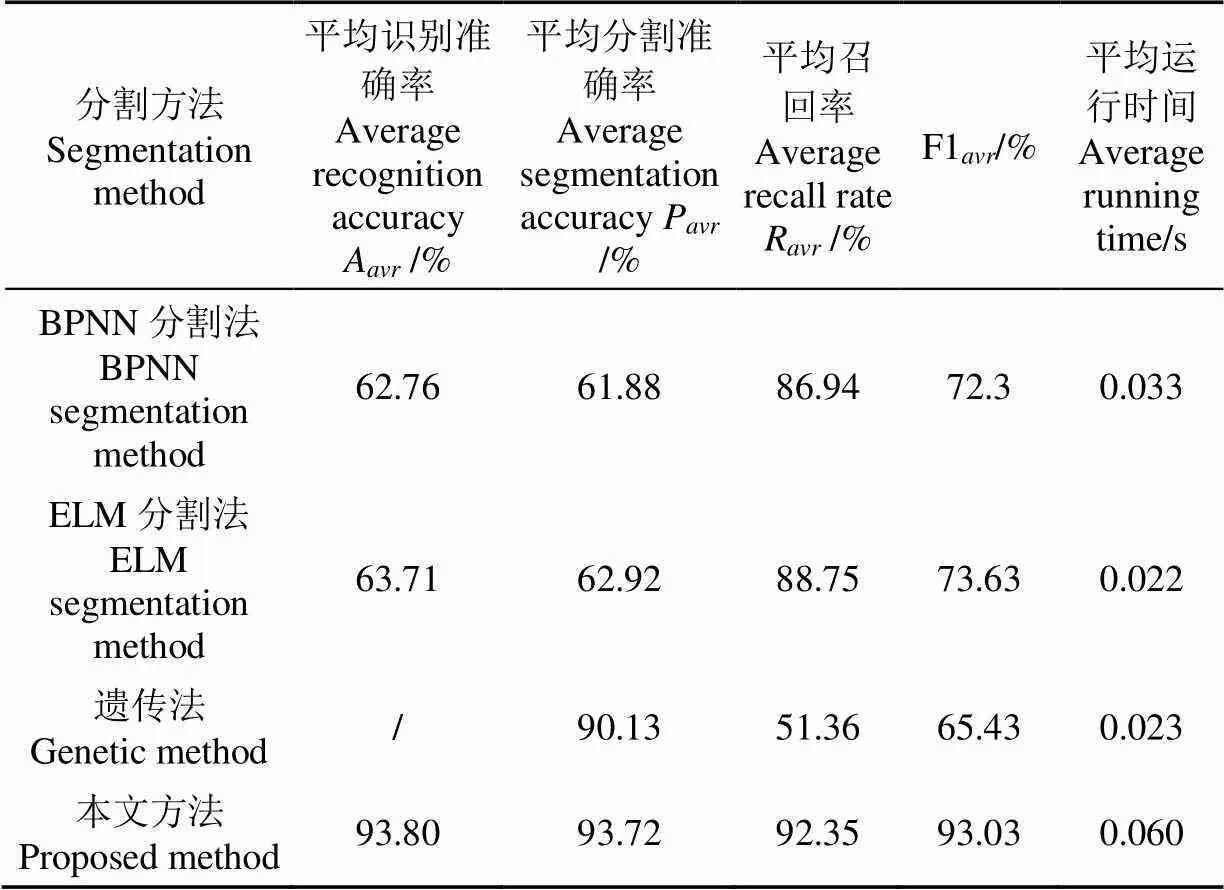

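Table 2 lists the average recognition accuracy A of the standing-stubble BPNN model, the ELM model and the proposed model, together with the average segmentation accuracy P, the average recall R and the F1 value of the different methods. The BPNN and ELM models have low average recognition accuracy A (62.76% and 63.71%), which leads to serious mis-segmentation in the BPNN and ELM segmentation methods: too many background pixels are segmented as stubble. Their average segmentation accuracy P is therefore low (61.88% and 62.92%) while their average recall R is relatively high (86.94% and 88.75%). The genetic method over-segments severely, that is, too many standing-stubble pixels are classified as background, so its average segmentation accuracy is high (90.13%) but its average recall is low (51.36%). The proposed method has the highest average recognition accuracy A, average segmentation accuracy P and average recall R of the four methods: 93.8%, 93.72% and 92.35%, respectively. Overall, the proposed method has the highest F1 value, 93.03%. The BPNN and ELM segmentation methods are close to each other at 72.3% and 73.63%, and the genetic method has the lowest F1 value, 65.43%. The proposed method therefore segments well and adapts well to complex environments.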

Table 2 Performance indices of different methods for segmenting maize stubble row images

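In terms of time, the proposed method takes 0.06 s on average. This is 0.038 s longer than the fastest method, the ELM segmentation method (0.022 s), but the average segmentation accuracy P, average recall R and F1 value of the proposed method are far higher than those of the other three methods, and processing takes only tens of milliseconds, which meets the needs of real-time processing. Considering both segmentation performance and running time, the proposed method segments fairly complete maize stubble rows from complex natural environments, with strong robustness, high segmentation accuracy and good real-time performance. However, the results for sunny backlit conditions in Figs. 4 to 7 show that the method still produces some mis-segmentation on backlit images, so ways of reducing shadow interference need further study.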

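5 Conclusions

This paper takes standing maize stubble left by combine harvesting with stubble retention as the research object and proposes a maize stubble row image segmentation method based on a support vector machine (SVM). The method has high segmentation accuracy and adapts well to changes in inter-row straw cover and lighting.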

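1) The SVM-based method uses principal component analysis (PCA) to select 21 features that help to separate the target (standing stubble) from the background (inter-row straw and bare soil surface), and forms them into the feature vector used to train the standing-stubble SVM recognition model. The trained model is slid over the selected region of interest (ROI) to detect standing stubble, the stubble row is segmented by thresholding, and morphological processing refines the result. This achieves accurate and fast segmentation of maize stubble rows in complex environments.

2) The back propagation neural network (BPNN) segmentation method, the extreme learning machine (ELM) segmentation method, the genetic method and the proposed method were applied to test images covering four inter-row straw cover amounts and four lighting conditions. The stubble row regions obtained by the proposed method are more complete. It shows less mis-segmentation than the BPNN and ELM segmentation methods and less over-segmentation than the genetic method.

3) The average recognition accuracy of standing stubble, the average segmentation accuracy, the average recall, and the weighted harmonic mean of average segmentation accuracy and average recall were used to analyze the performance of the proposed method quantitatively. The proposed method performs best and far exceeds the other three methods, with values of 93.8%, 93.72%, 92.35% and 93.03%, respectively.

4) The average processing time of the proposed method is 0.06 s, which gives good real-time performance. Judged by segmentation quality and by the four indices above, the proposed method segments maize stubble row images well under complex conditions such as different straw cover amounts and different lighting.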

[1] National Bureau of Statistics of China. China Statistical Yearbook[M]. Beijing: China Statistics Press, 2019. (in Chinese)

[2] Kassam A, Friedrich T, Derpsch R. Global spread of conservation agriculture[J]. International Journal of Environmental Studies, 2018, 76(1): 29-51.

[3] He Jin, Li Hongwen, Chen Haitao, et al. Research progress of conservation tillage technology and machine[J]. Transactions of the Chinese Society for Agricultural Machinery, 2018, 49(4): 1-19. (in Chinese with English abstract)

[4] Wang Weiwei, Zhu Cunxi, Chen Liqing, et al. Design and experiment of active straw-removing anti-blocking device for maize no-tillage planter[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(24): 10-17. (in Chinese with English abstract)

[5] Luo Zheng. Study on Key Technology of 12 Rows Wheat No-Tillage Planter in Original Stubble Fields[D]. Harbin: Northeast Agricultural University, 2016. (in Chinese with English abstract)

[6] Wang Chunlei, Li Hongwen, He Jin, et al. State-of-the-art and prospect of automatic navigation and measurement techniques application in conservation tillage[J]. Smart Agriculture, 2020, 2(4): 41-55. (in Chinese with English abstract)

[7] Chen Yuan. Detection of Stubble Row for Machine Vision Guidance in No-Till Field[D]. Beijing: China Agricultural University, 2008. (in Chinese with English abstract)

[8] Chen Wanzhi. Study on Maize Stubble Avoidance Technology Based on Machine Vision for Row-Follow No-Till Seeder[D]. Beijing: China Agricultural University, 2018. (in Chinese with English abstract)

[9] Yang Xinting, Liu Mengmeng, Xu Jianping, et al. Image segmentation and recognition algorithm of greenhouse whitefly and thrip adults for automatic monitoring device[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(1): 164-170. (in Chinese with English abstract)

[10] Meng Qingkuan. Methods of Navigation Control and Path Recognition in the Integrated Guidance System of Agricultural Vehicle and Implement Based on Machine Vision[D]. Beijing: China Agricultural University, 2014. (in Chinese with English abstract)

[11] Zhang Jianhua, Ji Ronghua, Yuan Xue, et al. Recognition of pest damage for cotton leaf based on RBF-SVM algorithm[J]. Transactions of the Chinese Society for Agricultural Machinery, 2011, 42(8): 178-183. (in Chinese with English abstract)

[12] Baron J, Hill D J. Monitoring grassland invasion by spotted knapweed (Centaurea maculosa) with RPAS-acquired multispectral imagery[J]. Remote Sensing of Environment, 2020, 249: 112008.

[13] Jia F, Li S, Zhang T. Detection of cervical cancer cells based on strong feature CNN-SVM network[J]. Neurocomputing, 2020, 411: 112-127.

[14] Chen Caiwen, Du Yonggui, Zhou Chao, et al. Evaluation of feeding activity of shoal based on image texture[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(5): 232-237. (in Chinese with English abstract)

[15] Yang Wei, Lan Hong, Li Minzan, et al. Prediction of top soil layer bulk density based on image processing and gradient boosting regression tree model[J]. Transactions of the Chinese Society for Agricultural Machinery, 2020, 51(9): 193-200. (in Chinese with English abstract)

[16] Drewry J L, Luck B D, Willett R M, et al. Predicting kernel processing score of harvested and processed corn silage via image processing techniques[J]. Computers and Electronics in Agriculture, 2019, 160: 144-152.

[17] Nouri-Ahmadabadi H, Omid M, Mohtasebi S S, et al. Design, development and evaluation of an online grading system for peeled pistachios equipped with machine vision technology and support vector machine[J]. Information Processing in Agriculture, 2017, 4(4): 333-341.

[18] Xu Z, Diao S, Teng J, et al. Breed identification of meat using machine learning and breed tag SNPs[J]. Food Control, 2021, 125(7): 107971.

[19] Sengupta Subhajit, Lee W S. Identification and determination of the number of immature green citrus fruit in a canopy under different ambient light conditions[J]. Biosystems Engineering, 2014, 117: 51-61.

[20] Jiang B, Ping W, Zhuang S, et al. Detection of maize drought based on texture and morphological features[J]. Computers and Electronics in Agriculture, 2018, 151: 50-60.

[21] Cai Daoqing, Li Yanming, Qin Chengjin, et al. Detection method of boundary of paddy fields using support vector machine[J]. Transactions of the Chinese Society for Agricultural Machinery, 2019, 50(6): 22-27, 109. (in Chinese with English abstract)

[22] Massah J, Vakilian K A, Shabanian M, et al. Design, development, and performance evaluation of a robot for yield estimation of kiwifruit[J]. Computers and Electronics in Agriculture, 2021, 185: 106132.

[23] Li Jia, Lyv Chengxu, Yuan Yanwei, et al. Automatic recognition of corn straw coverage based on fast Fourier transform and SVM[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2019, 35(20): 194-201. (in Chinese with English abstract)

[24] Chen Lintao, Ma Xu, Cao Xiulong, et al. Evaluation research of physical characteristics of hybrid rice buds based on principal component analysis[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2019, 35(16): 334-342. (in Chinese with English abstract)

[25] Chen Yingyi, Cheng Qianqian, Fang Xiaomin, et al. Principal component analysis and long short-term memory neural network for predicting dissolved oxygen in water for aquaculture[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(17): 183-191. (in Chinese with English abstract)

[26] Hong G, El-Hamid H. Hyperspectral imaging using multivariate analysis for simulation and prediction of agricultural crops in Ningxia, China[J]. Computers and Electronics in Agriculture, 2020, 172: 105355.

[27] Kumar S, Dhakshina, Esakkirajan S, Bama S, et al. A microcontroller based machine vision approach for tomato grading and sorting using SVM classifier[J]. Microprocessors and Microsystems, 2020, 76: 103090.

[28] Liang Xihuizi, Chen Bingqi, Li Minzan, et al. Method for dynamic counting of cotton rows based on HOG feature and SVM[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2020, 36(15): 173-181. (in Chinese with English abstract)

[29] Konstantinos L, Patrizia B, Dimitrios M, et al. Machine learning in agriculture: A review[J]. Sensors, 2018, 18(8): 2674.

[30] Azarmdel Hossein, Jahanbakhshi Ahmad, Mohtasebi Seyed Saeid, et al. Evaluation of image processing technique as an expert system in mulberry fruit grading based on ripeness level using artificial neural networks (ANNs) and support vector machine (SVM)[J]. Postharvest Biology and Technology, 2020, 166: 111201.

[31] Zhang Jianhua, Kong Fantao, Li Zhemin, et al. Recognition of honey pomelo leaf diseases based on optimal binary tree support vector machine[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2014, 30(19): 222-231. (in Chinese with English abstract)

[32] Wang Jian, Zhou Qin, Yin Aijun. Self-adaptive segmentation method of cotton in natural scene by combining improved Otsu with ELM algorithm[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(14): 173-180. (in Chinese with English abstract)

[33] Xu Weiyue, Chen Huan, Su Qiong, et al. Shadow detection and removal in apple image segmentation under natural light conditions using an ultrametric contour map[J]. Biosystems Engineering, 2019, 184: 142-154.

[34] Chen Shanxiong, Wu Sheng, Yu Xianping, et al. Buckwheat disease recognition using convolution neural network combined with image processing[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(3): 155-163. (in Chinese with English abstract)

Image segmentation of maize stubble row based on SVM

Wang Chunlei, Lu Caiyun, Li Hongwen※, He Jin, Wang Qingjie, Jiang Shan

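(1. College of Engineering, China Agricultural University, Beijing 100083, China; 2. Scientific Observing and Experimental Station of Arable Land Conservation (North Hebei), Ministry of Agriculture and Rural Affairs, Beijing 100083, China)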

Accurate identification of maize stubble rows is a prerequisite for automatic row-following seeding using machine vision. However, stubble row images from maize fields harvested by combine harvesters are difficult to segment, mainly because the stubble differs little in chromaticity from the bare soil surface and maize residues. In this study, an image segmentation method based on a support vector machine (SVM) was presented to achieve precise and rapid segmentation of the maize stubble row. Firstly, principal component analysis (PCA) was used for dimensionality reduction and feature optimization of the dataset, and the features that best distinguish standing stubble from bare soil surface and maize residues were selected. Specifically, 1 500 sample images of standing stubble, 1 500 sample images of bare soil surface and 1 500 sample images of maize residues were collected, and 2 210 features (697 color features and 1 513 texture features) were extracted from them. PCA was then used to choose 21 color features of the standing stubble, bare soil surface and maize residues in the R, G, B, L, a, b, V, YIQ-Y and YCbCr-Y components. The selected features formed a 21-dimensional feature vector, which was used as the input of the standing-stubble SVM recognition model. Secondly, the region of interest (ROI) was selected in the middle of the image so that it contained the complete maize stubble row, which raised the efficiency of image segmentation. Finally, the trained SVM recognition model was slid over the ROI with a 25×25 (pixel) window to detect standing stubble. If the current window was detected as standing stubble, its grayscale value was set to 255. The maize stubble row was segmented by thresholding once the sliding detection was complete. The segmented binary image was then refined by a morphological opening with a disc-shaped structuring element with a radius of 2 pixels. Furthermore, 100 test images were used to verify the segmentation performance. They were collected at the Scientific Observing and Experimental Station of Arable Land Conservation (North Hebei), Ministry of Agriculture and Rural Affairs, in Zhuozhou City, China, in October 2019. The images fell into four classes by the amount of maize residue between rows: 0, 1, 2 and 3 kg/m². Each class covered front lighting, direct sunlight and backlighting on sunny days, as well as cloudy days. Moreover, the images of the 0 kg/m² class also covered different surface shapes and surface moisture contents, owing to changes in time and weather. The results showed that the algorithm was accurate and robust for stubble row segmentation under various amounts of maize residue between rows and different lighting conditions. The average recognition accuracy of standing stubble was 93.8% for the SVM recognition model, against 62.76% and 63.71% for the BPNN and ELM models, respectively. The average segmentation accuracy, average recall rate and F1 value of the SVM method were 93.72%, 92.35% and 93.03%, respectively. The corresponding values were 61.88%, 86.94% and 72.3% for the BPNN method, 62.92%, 88.75% and 73.63% for the ELM method, and 90.13%, 51.36% and 65.43% for the genetic method. Additionally, the average processing time of the SVM method was 0.06 s for a 640×480 (pixel) image, indicating good real-time performance. Therefore, the SVM recognition model performed better than the other methods in segmenting the maize stubble row after maize is harvested by combine harvesters.

machine vision; image segmentation; support vector machine(SVM); principal component analysis(PCA); maize stubble row

Wang Chunlei, Lu Caiyun, Li Hongwen, et al. Image segmentation of maize stubble row based on SVM[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(16): 117-126. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2021.16.015 http://www.tcsae.org

2021-05-14

2021-07-21

China Agriculture Research System (CARS03); 2115 Talent Development Program of China Agricultural University

Wang Chunlei, PhD candidate, research interests: modern agricultural equipment and computer measurement and control technology. Email: chlwang@cau.edu.cn

Li Hongwen, Professor, research interests: modern agricultural equipment and computer measurement and control technology. Email: lhwen@cau.edu.cn

10.11975/j.issn.1002-6819.2021.16.015

TP391.4

A

1002-6819(2021)-16-0117-10