Extraction of geometric parameters of soybean canopy by airborne 3D laser scanning

Guan Xianping1, Liu Kuan1, Qiu Baijing1※, Dong Xiaoya1, Xue Xinyu2

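(1. Key Laboratory of Plant Protection Engineering, Ministry of Agriculture and Rural Affairs, Jiangsu University, Zhenjiang 212013, China; 2. Nanjing Research Institute for Agricultural Mechanization, Ministry of Agriculture and Rural Affairs, Nanjing 210014, China)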

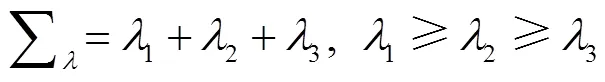

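To accurately acquire the geometric parameters (height and volume) of individual soybean plants in the field, this paper built a crop phenotype detection system based on airborne LiDAR and carried out field calibration and detection experiments. To address the large variation in ground flatness and the difficulty of separating plants with interlaced branches and leaves under the ridge planting mode of field soybean, an extraction method based on local neighborhood feature segmentation and the mean shift algorithm was proposed for the LiDAR phenotype detection system. From the acquired point cloud, a ridge of plant rows was first extracted with a semantic segmentation method based on local neighborhood features, individual plants were then extracted with the mean shift algorithm, and finally plant surfaces were reconstructed and plant geometric parameters were computed. The maximum errors of the LiDAR phenotype detection system along the direction of travel, perpendicular to the direction of travel, and perpendicular to the ground were 0.58% (5.8 cm), −1.75% (−7.0 cm) and −1.74% (−3.4 cm), respectively. The segmentation method based on local neighborhood features classified plants and ground well; compared with manual counts, the mean relative error of the detected number of plants was 11.83%. Compared with the commonly used RANSAC (random sample consensus) method, the height calculation method proposed here reduced the mean relative error of soybean plant height from 9.05% to 5.14%, and the mean canopy volume after reconstruction with the alpha-shape algorithm was 48.5 dm³. This work can serve as a reference for crop plant segmentation and volume statistics.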

laser; extraction; data processing; point cloud of single plant; geometric parameters

0 Introduction

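Acquiring and analyzing field information is a prerequisite for implementing precision agriculture[1]. Crop shape and position information underpins precision spraying technology[2], and detecting crop geometry is an important research topic in target-oriented variable-rate spraying[3]. A growing number of techniques can detect crop shape[4]; those applied to target crops mainly include ultrasonic sensing, infrared sensing, visible-light imaging with image processing, and laser radar (LiDAR). Ultrasonic sensing has been used to acquire citrus canopy shape and compute canopy volume[5] and to estimate the canopy density of orchard targets[6]; Maghsoudi et al.[7] trained a neural network on ultrasonic data and obtained reliable estimates of fruit tree volume. Ultrasonic sensors are inexpensive, but they suffer from poor directivity, sensitivity to distance, and low sampling frequency. Infrared sensing can improve the performance of variable-rate sprayers by determining whether a target crop is present[8], and can be combined with data from other sensors, such as color sensors[9], so that only green plants are sprayed. However, infrared sensing is disturbed by ambient light, is not sufficiently stable, and faces many restrictions in field use. Visible-light imaging has also been used for crop phenotyping and parameter measurement. For example, plant morphology or surface model information obtained from image analysis has been used to optimize transplanter structure and transplanting time[10], to clarify the interaction between crops and the environment[11], and to estimate barley biomass[12]. However, visible-light imaging places high demands on lighting conditions and cannot meet the requirements of actual production[13].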

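LiDAR offers high accuracy, strong directivity, short response time, and low sensitivity to lighting conditions[14], and has been widely used to acquire tree crown parameters[15-16], to predict fruit tree biomass and yield[17-19], and to obtain target crop phenotypes with real-time correction[20]. Combining a 2D laser scanner with a sub-meter global navigation satellite system (GNSS) yields high-precision geographic information on field operations and improves pesticide application, irrigation, and field information management. Garrido et al.[21] reconstructed the morphological structure of maize at an early growth stage in a greenhouse. Sun et al.[22] combined a 2D laser scanner with a high-precision (1 cm) RTK-GPS into a high-throughput information acquisition system and obtained reliable estimates of cotton height. Escola et al.[23] fused real-time differential GNSS with data from a UTM30-LX-EW 2D laser scanner to obtain the canopy geometric parameters and structure of olive trees. Cheng Man et al.[24] designed a data acquisition system built around a 2D laser scanner for the particular working environment of peanut fields; boundaries were obtained by curve fitting of the canopy profile point cloud, from which peanut height was derived, improving the efficiency of height acquisition. 3D LiDAR can acquire data at higher density and is often used to acquire field geographic information for estimating the biomass of target crops. Martin et al.[25] fused 3D laser scanner data with GNSS and an inertial measurement unit (IMU) to map wheat and estimate its height and volume. Ravi et al.[26] built a high-throughput detection system based on an unmanned aerial vehicle (UAV) and 3D LiDAR to detect changes in crop height and canopy cover and to map crops.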

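Existing point-cloud-based studies of crop height and volume mostly do not distinguish individual plants, and assume either that plants do not have interlaced branches and leaves or that the ground is relatively flat; few studies address the extraction of individual plants where ground flatness varies widely and branches and leaves interlace heavily. This paper therefore proposes a point cloud segmentation and extraction method that uses local neighborhood feature segmentation and the mean shift algorithm to separate soybean plants from the ground and extract individual plants; the alpha-shape algorithm is used for 3D crop reconstruction and geometric parameter calculation.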

1 Materials and methods

1.1 Data acquisition

1.1.1 Composition of the data acquisition system

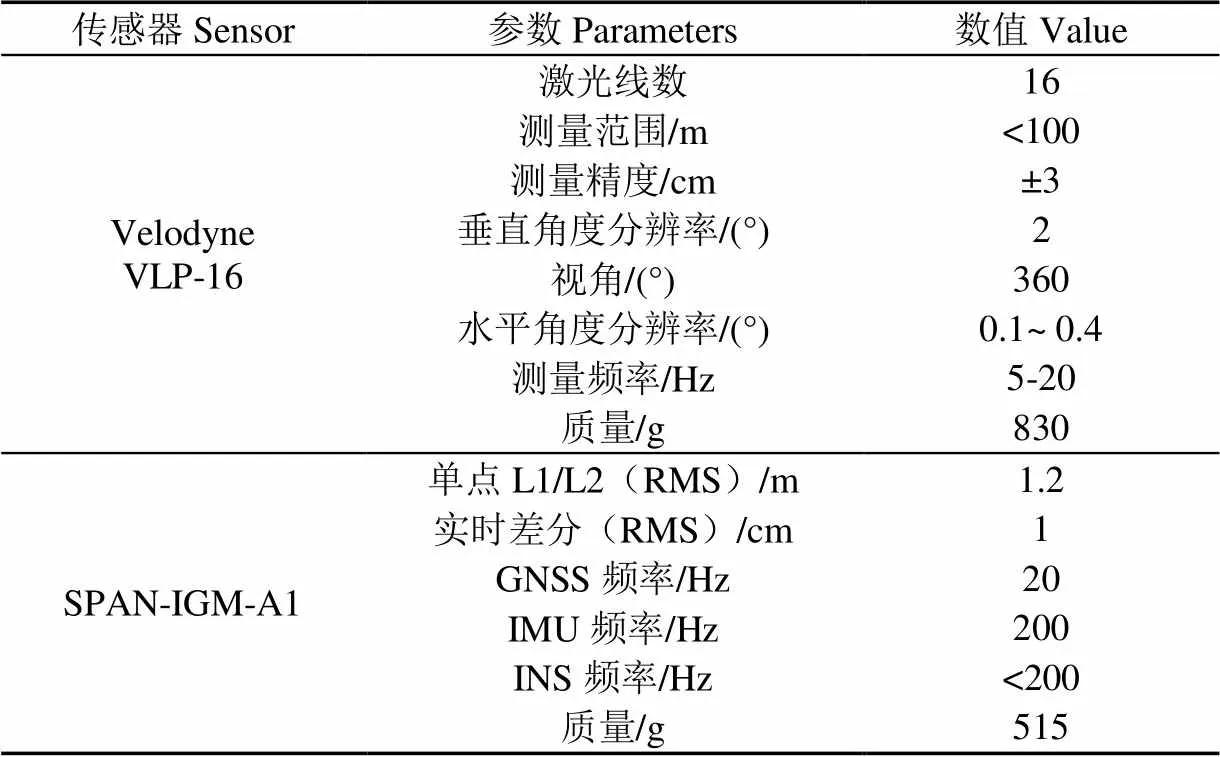

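The 3D LiDAR crop phenotype detection system (hereafter the detection system) consists mainly of a mobile measurement unit, a base station, and a PC. The mobile measurement unit comprises a laser scanner (LASER) and a position and attitude measurement unit. The laser scanner is a VLP-16 3D laser scanner from Velodyne (USA); the position and attitude measurement unit includes a SPAN-IGM-A1 integrated navigation system from NovAtel, a GPS1000 antenna, a storage controller, and a radio. The parameters of the main components of the mobile measurement unit are listed in Table 1. The base station consists of a C280-AT receiver from BDStar Navigation, a data logger, a GPS1000 antenna, and a power supply. The PC can remotely control the mobile measurement unit via the radio. To acquire target crop information efficiently, the mobile measurement unit is mounted on a DJI M600 Pro UAV. To obtain a 3D point cloud of the field environment, the 3D point cloud data (PCAP file) acquired by the laser scanner must be fused with the position and attitude data (POS file) obtained by post-processed differential positioning. The raw data collected by the GNSS receivers of the base station and the mobile measurement unit must be solved before the GNSS and inertial navigation system (INS) data can be coupled.

Table 1 Parameters of the VLP-16 and SPAN-IGM-A1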

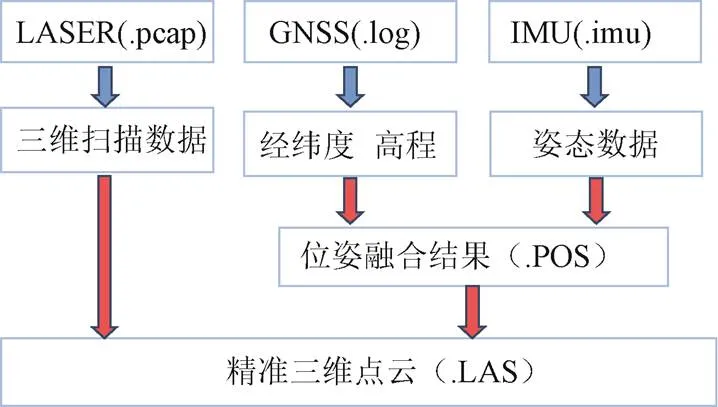

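NovAtel's position and attitude processing software Inertial Explorer 8.70 was used for the solution and coupling. The solution yields GNSS data, IMU data, heading data, etc., and tightly coupling the GNSS and IMU data gives the laser scanner's position and attitude data (POS file). The point cloud processing software Li-Acquire from Beijing BDStar Navigation was used to integrate the PCAP and POS files into a standard LAS point cloud file. The data integration workflow is shown in Fig. 1.

Fig.1 Flow chart of data integration

1.1.2 Test environment and scheme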

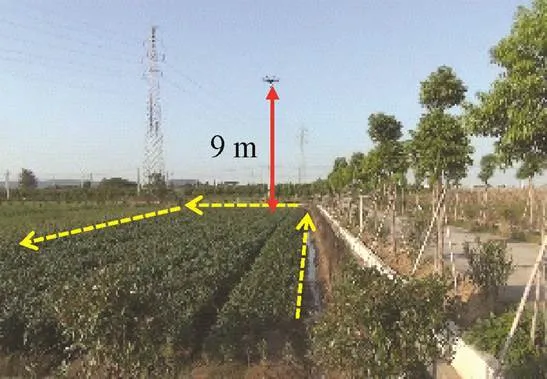

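Field tests were carried out from August to September 2017 in a breeding test field at the Baima teaching and research base (31.62°N, 119.18°E) of the Nanjing Research Institute for Agricultural Mechanization, Ministry of Agriculture and Rural Affairs, as shown in Fig. 2. The test field was 41 m long and 18 m wide, with 7 ridges separated by furrows; each ridge carried 5 crop rows, with a plant spacing of 0.45 m and a row spacing of 0.55 m.

The target crop was soybean at the pod-setting stage, after 55 to 60 d of growth. During data acquisition the maximum ambient wind speed was 1.5 m/s, and the UAV path was planned in the flight control software. The swath width was set to 18 m with a swath overlap of 40%, the horizontal forward speed of the UAV did not exceed 0.5 m/s, and the LiDAR was on average 9.0 m above the ground. Points with a range of 2 to 20 m were selected for analysis; within the 18 m swath the overall scanning point density was about 1 600 points/m².

Fig.2 Schematic of the field test area and scanning path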

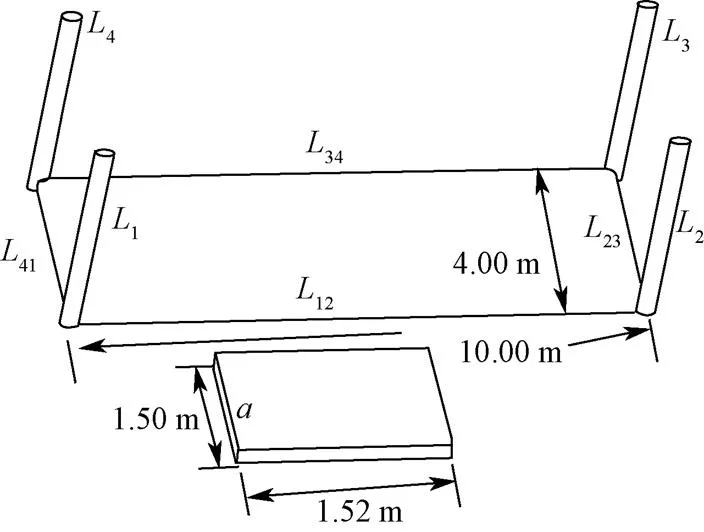

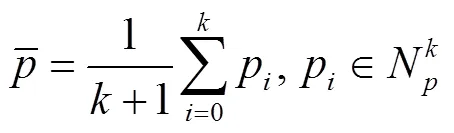

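The height and volume of 6 soybean plants in one ridge of the test field were measured manually and compared with the system measurements. Alongside the field test, a scheme was set up to verify data accuracy in the field: 1 rectangular board and 4 poles were placed beside the test field, with the layout and dimensions shown in Fig. 3.

Note: L1, L2, L3 and L4 are the lengths of the 4 poles, cm; L12, L23, L34 and L41 are the distances between poles, m; a and b are the width and length of the rectangular board, cm.

The measurement accuracy of the system during the test was verified by comparing the values of L12, L23, L34 and L41 measured in the point cloud with manual measurements. Point cloud values were measured in the LiDAR360 software and manual values with a tape measure; each was measured 3 times and averaged.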

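1.2 Data processing method

The goal of data processing is to extract the 3D point cloud of each individual plant from the LAS file and to complete geometric parameter extraction and 3D modeling of the target crop. It is divided into four steps: point cloud preprocessing, segmentation of ground and plant point clouds, extraction of individual soybean plants, and calculation of single-plant canopy height and volume.

1.2.1 Point cloud preprocessing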

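The acquired field point cloud contains a large amount of non-target information and outliers, which increases processing time and difficulty. This paper removes this noise by setting a region of interest (ROI) and applying a method based on the mean neighborhood distance[27].

1) Selecting the ROI point cloud. The coordinate system of the point cloud acquired by the detection system is not aligned with the ridge direction of the field, and the planar coordinate values are large. A field coordinate system is therefore established from the field center and the ridge direction, and the original point cloud is transformed into it. The ROI point cloud is obtained by setting lower and upper bounds on each axis of the field coordinate system and keeping the points within those bounds.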
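The coordinate transformation and bounding step can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function names, the ridge azimuth parameter and the example bounds are assumptions introduced here.

```python
import numpy as np

def to_field_frame(points, center, ridge_azimuth_rad):
    """Shift points to the field center and rotate about z so x runs along the ridges.

    ridge_azimuth_rad is the (assumed) angle of the ridge direction measured
    from the x axis of the original map frame.
    """
    c, s = np.cos(ridge_azimuth_rad), np.sin(ridge_azimuth_rad)
    # Rotation by -azimuth about the vertical axis maps the ridge direction onto +x
    rot = np.array([[c, s, 0.0], [-s, c, 0.0], [0.0, 0.0, 1.0]])
    return (np.asarray(points, float) - np.asarray(center, float)) @ rot.T

def select_roi(points, lower, upper):
    """Keep points whose coordinates lie within [lower, upper] on every axis."""
    points = np.asarray(points, float)
    mask = np.all((points >= np.asarray(lower)) & (points <= np.asarray(upper)), axis=1)
    return points[mask]

# Hypothetical example: three points, ridges running at 45 degrees in the map frame
cloud = np.array([[101.0, 101.0, 5.0], [100.0, 100.0, 0.0], [110.0, 110.0, 1.0]])
local = to_field_frame(cloud, center=(100.0, 100.0, 0.0), ridge_azimuth_rad=np.pi / 4)
roi = select_roi(local, lower=(-5.0, -1.0, -1.0), upper=(5.0, 1.0, 10.0))
```

Working in the field frame keeps coordinate values small and lets the ROI be an axis-aligned box, which is what makes the simple per-axis bounds sufficient.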

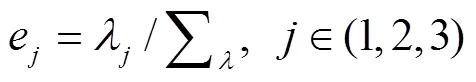
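Noise removal based on the mean neighborhood distance can be sketched as below. The text cites [27] for the method without giving its parameters, so the neighbor count `k`, the mean-plus-n-sigma rejection rule and the function name are assumptions; the brute-force distance matrix is only suitable for small clouds.

```python
import numpy as np

def remove_outliers(points, k=8, n_sigma=2.0):
    """Drop points whose mean distance to their k nearest neighbors is unusually large."""
    pts = np.asarray(points, float)
    # Pairwise distances (O(n^2) memory); a KD-tree would replace this for real data
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=2)
    # After sorting each row, column 0 is the point itself (distance 0)
    knn = np.sort(d, axis=1)[:, 1:k + 1]
    mean_d = knn.mean(axis=1)
    # Assumed rule: reject points beyond mean + n_sigma * std of the mean distances
    threshold = mean_d.mean() + n_sigma * mean_d.std()
    return pts[mean_d <= threshold]

# Hypothetical example: a 5 x 5 planar patch plus one far-away stray return
patch = [[0.1 * i, 0.1 * j, 0.0] for i in range(5) for j in range(5)]
noisy = np.array(patch + [[10.0, 10.0, 10.0]])
clean = remove_outliers(noisy, k=4, n_sigma=2.0)
```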

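1.2.2 Ground segmentation and crop point cloud extraction

To extract crop geometric parameters, the ground is usually removed with a segmentation threshold[22] or the RANSAC (random sample consensus) algorithm[33]. Under ridge planting, however, ground flatness varies widely, and a uniform threshold or RANSAC gives large errors. This paper proposes a semantic segmentation algorithm based on local neighborhood features to separate the target crop point cloud from the ground point cloud. The algorithm has 3 main steps: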

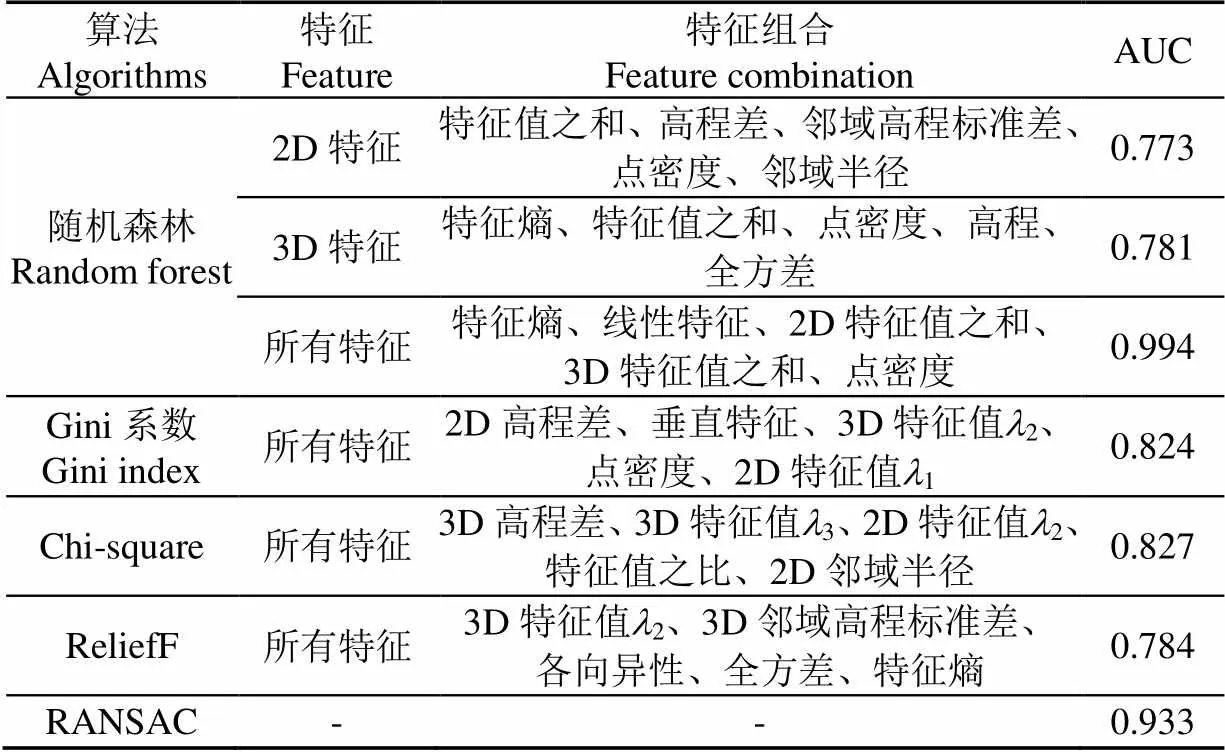

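3) Supervised classification and evaluation metric. The point cloud is classified with different feature combinations, and a random forest classifier is used to predict the class labels of the test set[31]. The AUC of the ROC curve is used as the metric of classification performance. The vertical axis of the ROC curve is the true positive rate and the horizontal axis is the false positive rate; the AUC is the area under the ROC curve, and the closer it is to 1, the better the classifier. The classification performance is also compared with that of the RANSAC algorithm.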
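The AUC metric can be computed directly from classifier scores. The sketch below uses the standard equivalence between the area under the ROC curve and the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one; the function name and the toy labels are assumptions for illustration, and the source does not state how its AUC values were computed.

```python
import numpy as np

def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positive and negative scores."""
    labels = np.asarray(labels, bool)
    scores = np.asarray(scores, float)
    pos, neg = scores[labels], scores[~labels]
    # Count positive/negative pairs ranked correctly; ties count one half
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)

# Hypothetical example: 1 = plant, 0 = ground, scores = predicted plant probability
auc = roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.5, 0.1])
```

In this toy case three of the four plant/ground pairs are ranked correctly, so the value is 0.75; a classifier that ranks every plant point above every ground point reaches 1.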

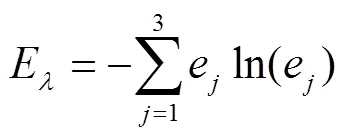

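1.2.3 Extraction of individual soybean plants

1.2.4 Calculation of soybean canopy height and volume

1) Definition and measurement of canopy height

The actual plant height of soybean is defined here as the distance from the intersection of the stem with the ground, avg, to the highest leaf, max, as shown in Fig. 4a. Because the laser beam has a low probability of passing through dense branches and leaves to reach the ground, the sampling frequency at avg is low, so its true value is estimated from the terrain around the avg point.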

Note: the unprimed and primed (′) symbols are the manually measured and system-measured values of plant height, respectively, cm; the marked point is the center of gravity of the plant point cloud.

Fig.4 Schematic diagram of height measurement
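One way to read this height definition is sketched below: fit a quadratic surface to the ground points, project the plant's center of gravity onto the ground plane, and average the fitted surface over the k ground samples nearest to that projection. The function names, the full six-term quadratic and the use of nearest ground samples are assumptions; in particular, with k=1 this sketch uses the nearest ground sample rather than the exact projection point.

```python
import numpy as np

def fit_quadric_ground(ground):
    """Least-squares fit of z = c0 + c1*x + c2*y + c3*x^2 + c4*x*y + c5*y^2."""
    x, y, z = ground[:, 0], ground[:, 1], ground[:, 2]
    design = np.column_stack([np.ones_like(x), x, y, x * x, x * y, y * y])
    coef, *_ = np.linalg.lstsq(design, z, rcond=None)
    return coef

def surface_z(coef, xy):
    """Evaluate the fitted ground surface at plan-view positions xy."""
    x, y = xy[:, 0], xy[:, 1]
    return coef[0] + coef[1] * x + coef[2] * y + coef[3] * x * x + coef[4] * x * y + coef[5] * y * y

def plant_height(plant, ground, k=20):
    """Highest plant point minus the mean fitted ground height near the centroid projection."""
    coef = fit_quadric_ground(ground)
    centroid_xy = plant[:, :2].mean(axis=0)
    # k ground samples nearest (in plan view) to the projected center of gravity
    dist = np.linalg.norm(ground[:, :2] - centroid_xy, axis=1)
    nearest_xy = ground[np.argsort(dist)[:k], :2]
    z_base = surface_z(coef, nearest_xy).mean()
    return plant[:, 2].max() - z_base

# Hypothetical example: flat ground at z = 0.1 m and a plant whose top is at 0.75 m
axis = np.linspace(-1.0, 1.0, 11)
ground_pts = np.array([[x, y, 0.1] for x in axis for y in axis])
plant_pts = np.array([[0.0, 0.0, 0.3], [0.05, 0.0, 0.75], [-0.05, 0.0, 0.5]])
height = plant_height(plant_pts, ground_pts, k=20)
```

Averaging over several neighbors smooths out local ridge and furrow relief, which is the motivation the text gives for preferring a neighborhood over a single point.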

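2) Definition and measurement of canopy volume

Plant canopy volume does not include the gaps within the canopy. However, the accuracy of the VLP-16 laser scanner is ±3 cm, which makes it difficult to detect parameters such as leaf thickness and branch width accurately; moreover, because of occlusion by branches and leaves, the shape of the lower branches and leaves cannot be captured accurately and completely, so canopy volume in the strict sense cannot be obtained. This paper therefore treats crop volume as the volume of the 3D solid bounded by the point cloud boundary. A 3D point cloud is a discrete representation of crop volume, and recovering the volume requires 3D reconstruction from the point cloud. The alpha shape algorithm is used for 3D reconstruction; it is an extension of Delaunay triangulation that recovers the outline of a point cloud from a scattered set of spatial points[32].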
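The link between Delaunay triangulation and the alpha shape volume can be illustrated with a short sketch. It keeps only the Delaunay tetrahedra whose circumscribed sphere has a radius below `alpha` and sums their volumes. The function name and this radius-based parameterization are assumptions; the concavity values quoted later in the paper may follow a different convention, and the authors' software is not named.

```python
import numpy as np
from scipy.spatial import Delaunay

def alpha_shape_volume(points, alpha):
    """Sum the volumes of Delaunay tetrahedra whose circumradius is below alpha."""
    pts = np.asarray(points, float)
    tets = pts[Delaunay(pts).simplices]            # shape (m, 4, 3)
    edges = tets[:, 1:] - tets[:, :1]              # three edge vectors from vertex 0
    vol = np.abs(np.linalg.det(edges)) / 6.0
    ok = vol > 1e-12                               # skip degenerate slivers
    # Circumcenter c relative to vertex 0 satisfies 2 * e_i . c = |e_i|^2 for each edge e_i
    rhs = (edges[ok] ** 2).sum(axis=2)[..., None]
    center = np.linalg.solve(2.0 * edges[ok], rhs)[..., 0]
    radius = np.linalg.norm(center, axis=1)
    return float(vol[ok][radius < alpha].sum())

# Hypothetical example: random points in a unit cube (units arbitrary)
rng = np.random.default_rng(0)
cloud = rng.random((300, 3))
v_hull = alpha_shape_volume(cloud, alpha=1e6)   # very large alpha keeps every tetrahedron
v_tight = alpha_shape_volume(cloud, alpha=0.2)  # smaller alpha carves away sparse regions
```

With a very large `alpha` the result approaches the convex hull volume; shrinking `alpha` removes large, sparsely supported tetrahedra first, which is why the reconstructed volume decreases as the envelope tightens.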

2 Results and analysis

2.1 Accuracy verification results

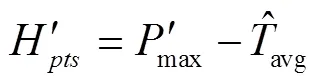

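The geometric dimensions of the rectangular board and poles measured by the system are given in Table 2. Because part of the rectangular board was occluded by crops, only side lengths are listed; Table 2 compares the system-measured and manually measured values for the board and poles. Along the crop row direction, i.e. the scanning direction of travel (L12, L34), for a dimension of 1 000 cm the maximum relative error was 0.58%, an error of 5.8 cm; perpendicular to the crop rows (L23, L41), for a dimension of 400 cm the maximum relative error was −1.75%, an error of −7.0 cm; perpendicular to the ground, for a dimension of 195 cm the maximum relative error was −1.74%, an error of −3.4 cm. Field accuracy is therefore within 10 cm. In relative terms, accuracy is highest along the direction of travel, and the accuracies perpendicular to the ground and perpendicular to the crop rows are similar. Some relative errors are small, possibly because individual measurements happen to have small errors, and possibly because the laser scanner is more accurate at short range (<20 m)[35].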
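The reported percentages follow from the signed relative error, (system value − manual value) / manual value. The short check below reproduces them; the system values are reconstructed here as manual value plus reported error, and the function and dictionary names are assumptions for illustration.

```python
def relative_error_percent(system_value, manual_value):
    """Signed relative error of the system measurement, in percent."""
    return (system_value - manual_value) / manual_value * 100.0

# Manual dimension (cm) and absolute error (cm) as reported in the text;
# the system value is reconstructed as manual + error.
reported = {
    "direction of travel": (1000.0, 5.8),
    "across crop rows": (400.0, -7.0),
    "vertical": (195.0, -3.4),
}

for name, (manual, error) in reported.items():
    rel = relative_error_percent(manual + error, manual)
    print(f"{name}: {rel:.2f}%")
```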

Table 2 Comparison of manual and system measurements of the rectangular board and poles

2.2 Ground extraction results

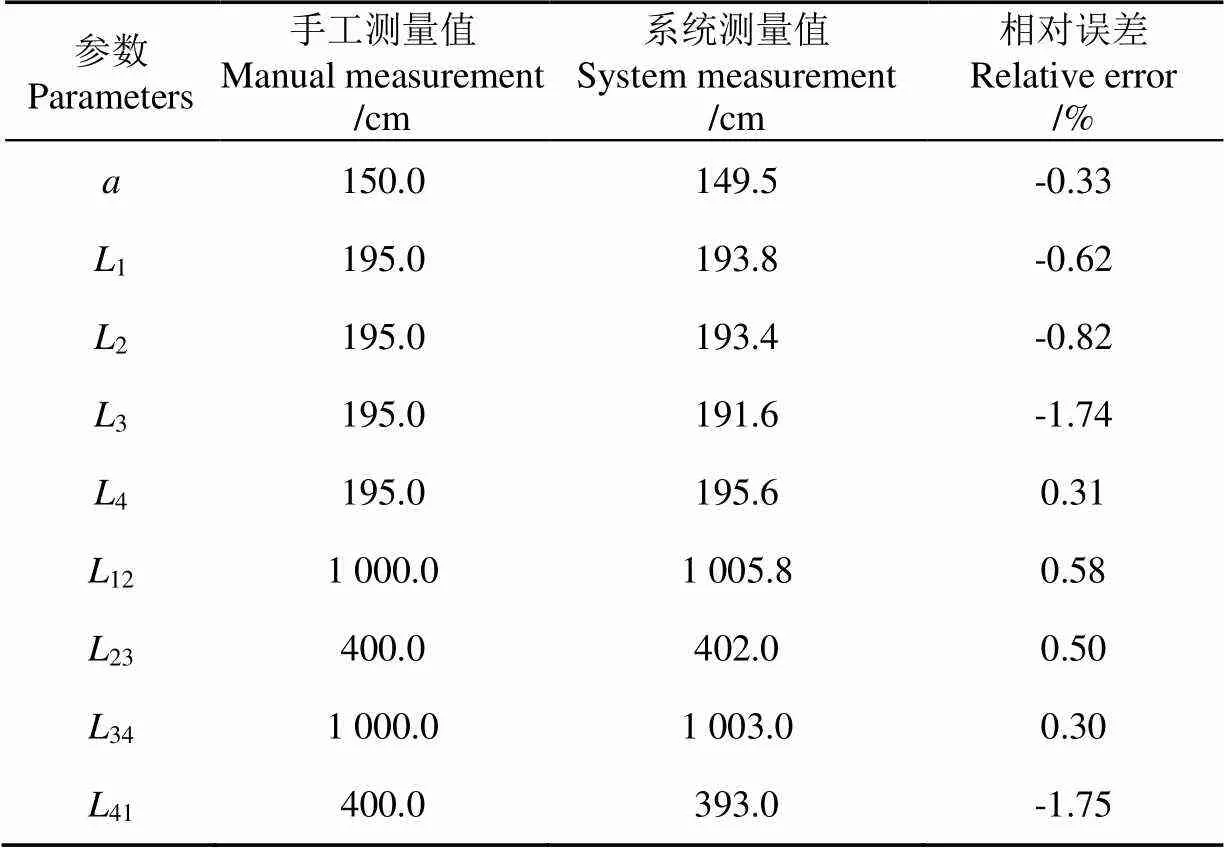

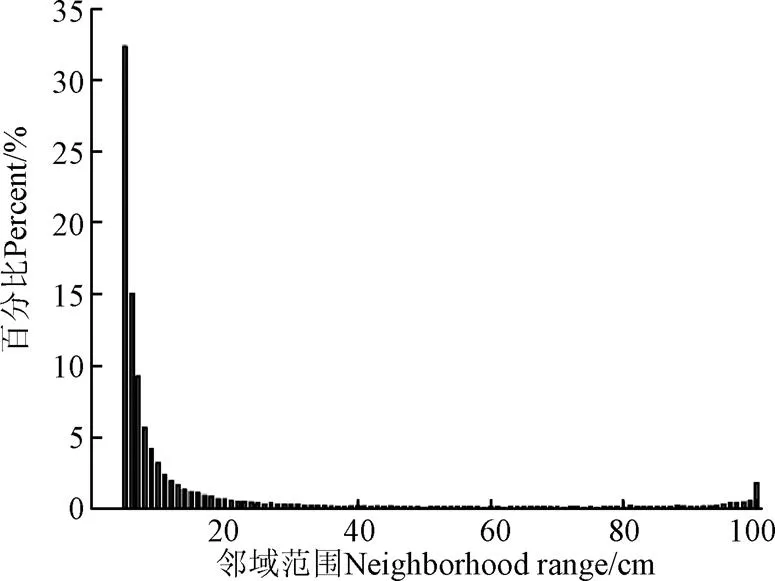

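The initial range of the optimal neighborhood was set to 5 to 100 points. The computed optimal neighborhoods are shown in Fig. 5: for 97% of the points, the optimal neighborhood contains fewer than 20 points. The point cloud file was labeled manually with a 3D point cloud annotation tool to build a labeled data set for evaluating ground detection and segmentation; the labeled training set is shown in Fig. 6, where bright colors indicate crops.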
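The surviving text does not say how the optimal neighborhood or the individual features were computed. A common approach, shown here purely as an assumption, derives shape features from the eigenvalues of the neighborhood covariance matrix and picks the neighborhood size that minimizes the eigenentropy. The function names and the feature subset are illustrative only.

```python
import numpy as np

def eigen_features(neighbors):
    """Linearity, planarity, sphericity and eigenentropy of one point neighborhood."""
    cov = np.cov(np.asarray(neighbors, float).T)
    ev = np.sort(np.linalg.eigvalsh(cov))[::-1]      # l1 >= l2 >= l3
    ev = np.clip(ev, 1e-12, None)                    # guard against zero or tiny negatives
    e = ev / ev.sum()
    l1, l2, l3 = ev
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
        "eigenentropy": float(-(e * np.log(e)).sum()),
    }

def optimal_k(points, index, k_values=range(5, 101)):
    """Neighborhood size (assumed search range 5 to 100) with the lowest eigenentropy."""
    pts = np.asarray(points, float)
    order = np.argsort(np.linalg.norm(pts - pts[index], axis=1))
    entropy = {k: eigen_features(pts[order[:k]])["eigenentropy"]
               for k in k_values if k <= len(pts)}
    return min(entropy, key=entropy.get)

# Hypothetical example: a flat 5 x 5 patch behaves like ground (high planarity)
patch = np.array([[x, y, 0.0] for x in range(5) for y in range(5)], float)
flat = eigen_features(patch)
best_k = optimal_k(patch, index=12)
```

Ground patches tend toward high planarity while foliage tends toward higher sphericity, which is the kind of contrast a classifier can exploit.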

Fig.5 Histogram of neighborhood size distribution

Fig.6 Labeling result of the training set

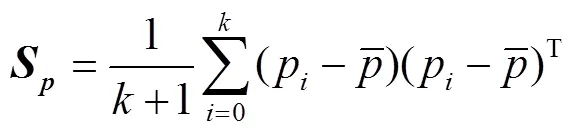

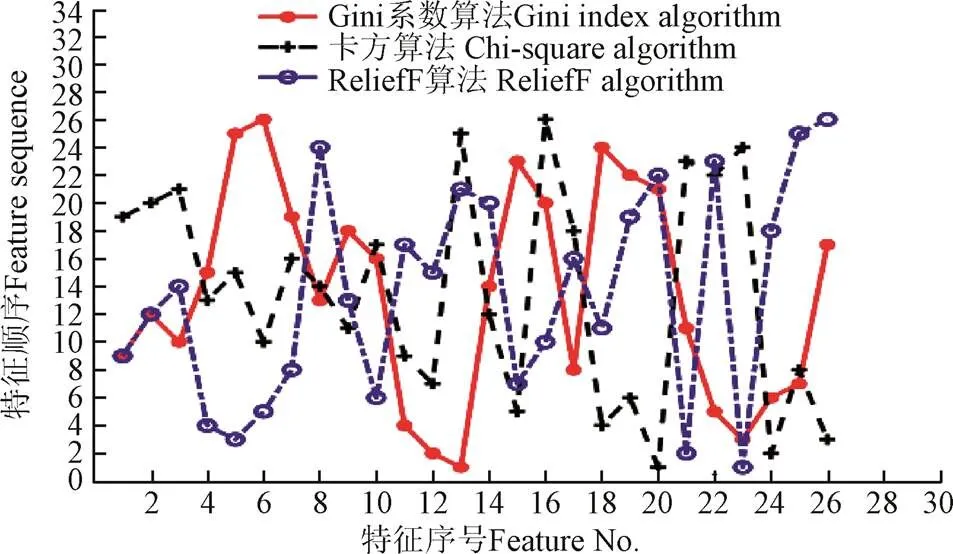

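Commonly used algorithms, namely the Gini index, Chi-square and ReliefF algorithms, were applied to evaluate 26 features; the results are shown in Fig. 7. A smaller rank value indicates that the feature classifies the point cloud better, and for each algorithm the 5 best-ranked features were selected as the feature combination for classification.

Fig.7 Feature selection results

In addition to the feature combinations from the above 3 algorithms, the random forest method was used to select features from the 2D features, the 3D features, and all features, again taking the 5 best features as the feature combination. The classification performance of the different feature combinations, and a comparison with the RANSAC classification algorithm, are given in Table 3. Classification with the feature combination determined by random forest from all features gave the largest AUC, 0.994. Unlike RANSAC, the proposed method needs no manual parameter tuning, which favors automatic processing of point cloud data.

2.3 Results of individual soybean plant extraction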

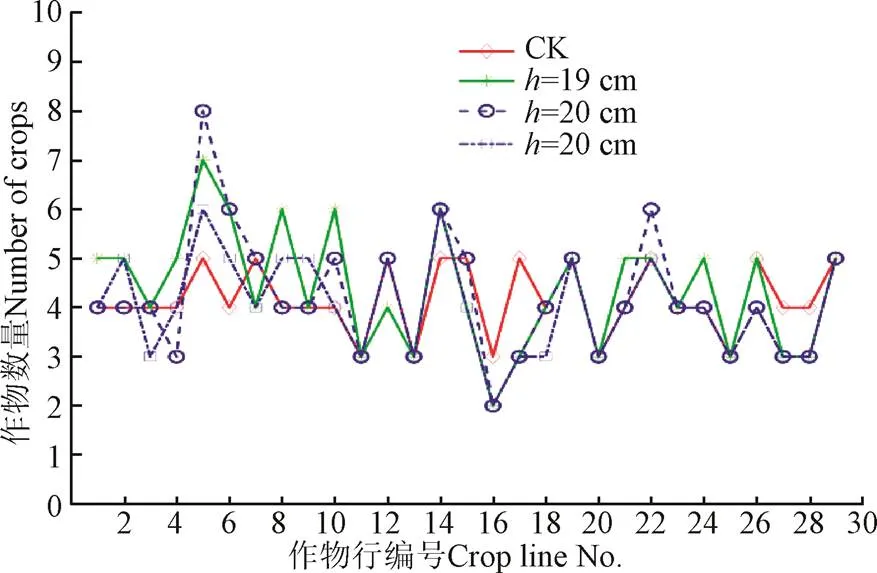

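The mean shift algorithm has one parameter to set, the bandwidth. Because soybean is sown at a set plant and row spacing, this prior knowledge is taken into account when setting the bandwidth. With a row spacing of 0.55 m and a plant spacing of 0.45 m, 3 bandwidths in the range 19 to 21 cm were tested to examine the relationship between bandwidth and the prior knowledge. The test data set contained 121 soybean plants actually collected; the relationship between each bandwidth and the number of plants is shown in Fig. 8. The relative error between the detected and manually counted number of plants was computed for each crop row. At h = 20 cm, the mean relative error of the detected number of plants was 11.83% and the distribution correlation was highest, at 0.675. Considering differences in crop growth and in agronomic practice, it can be conjectured that the optimal bandwidth hopt for segmenting single-plant point clouds with mean shift lies near half of the smaller of the row spacing and plant spacing (here 22.5 cm, half of the 0.45 m plant spacing). In this paper, the optimal segmentation bandwidth differed from this conjectured bandwidth by 2.5 cm.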
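A minimal flat-kernel mean shift in plan view illustrates how the bandwidth h controls single-plant separation. This is a sketch under stated assumptions, not the authors' code: the kernel type, the use of x-y coordinates only, the merging rule and all names are introduced here.

```python
import numpy as np

def mean_shift(xy, bandwidth, n_iter=50, tol=1e-4):
    """Flat-kernel mean shift; returns cluster centers and a label per input point."""
    pts = np.asarray(xy, float)
    modes = pts.copy()
    for _ in range(n_iter):
        # Each mode moves to the mean of the data points within one bandwidth of it
        d = np.linalg.norm(modes[:, None, :] - pts[None, :, :], axis=2)
        w = (d <= bandwidth).astype(float)
        new = (w @ pts) / w.sum(axis=1, keepdims=True)
        shift = np.linalg.norm(new - modes, axis=1).max()
        modes = new
        if shift < tol:
            break
    # Merge modes that converged to within one bandwidth of an existing center
    centers, labels = [], np.empty(len(pts), dtype=int)
    for i, m in enumerate(modes):
        for j, c in enumerate(centers):
            if np.linalg.norm(m - c) < bandwidth:
                labels[i] = j
                break
        else:
            centers.append(m)
            labels[i] = len(centers) - 1
    return np.array(centers), labels

# Hypothetical example: two plants 0.45 m apart (the plant spacing), h = 0.20 m
offsets = np.array([[-0.03, -0.03], [0.03, -0.03], [-0.03, 0.03], [0.03, 0.03], [0.0, 0.0]])
plants_xy = np.vstack([offsets, offsets + [0.45, 0.0]])
centers, labels = mean_shift(plants_xy, bandwidth=0.20)
```

A bandwidth near half the plant spacing keeps each search window from reaching the neighboring plant, which is consistent with the conjecture in the text.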

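Table 3 Comparison of classification results of different algorithms

Note: CK is the manual count; h is the bandwidth.

2.4 Results of soybean canopy height and volume calculation

2.4.1 Plant height results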

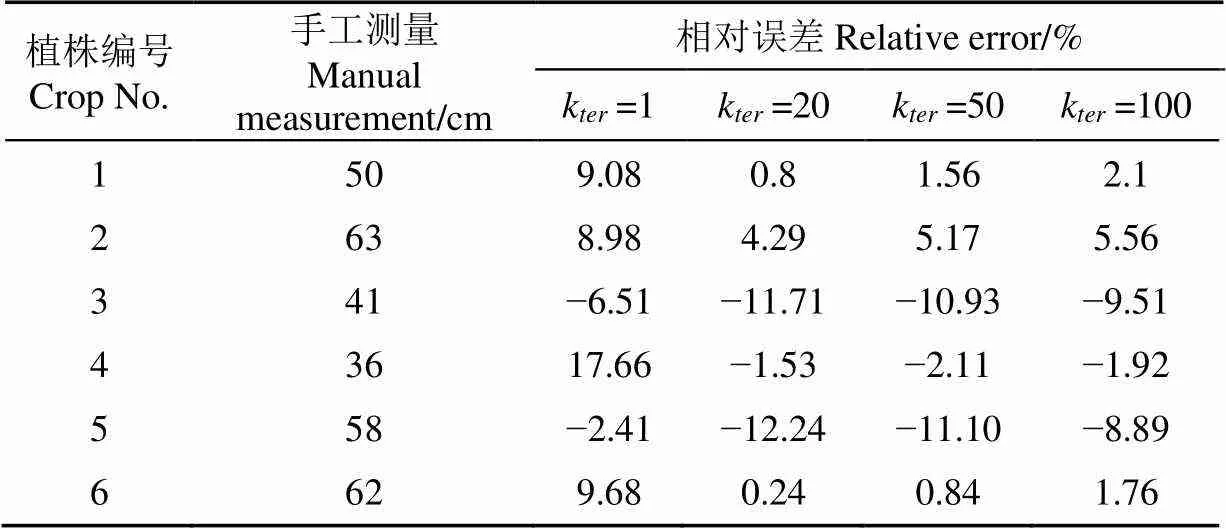

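Table 4 shows the relationship between plant height measurement error and the neighborhood size k around the projected center of gravity, for the system-measured heights of 6 soybean plants. When the projected center of gravity is used directly (k=1), the maximum relative error, 17.66%, occurs for the plant with a height of 36 cm, and the overall mean relative error is 9.05%. When plant height is computed from neighboring points, with k=20 the relative error falls markedly for 4 of the plants and the mean relative error falls to 5.14%; with k=100 the mean relative error falls to 4.96%. Although the mean relative error at k=100 is slightly smaller than at k=20, the plant point clouds are small, so k=20 was chosen as the neighborhood size of the projection point for computing plant height.

Table 4 Relationship between the value of kter and relative error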

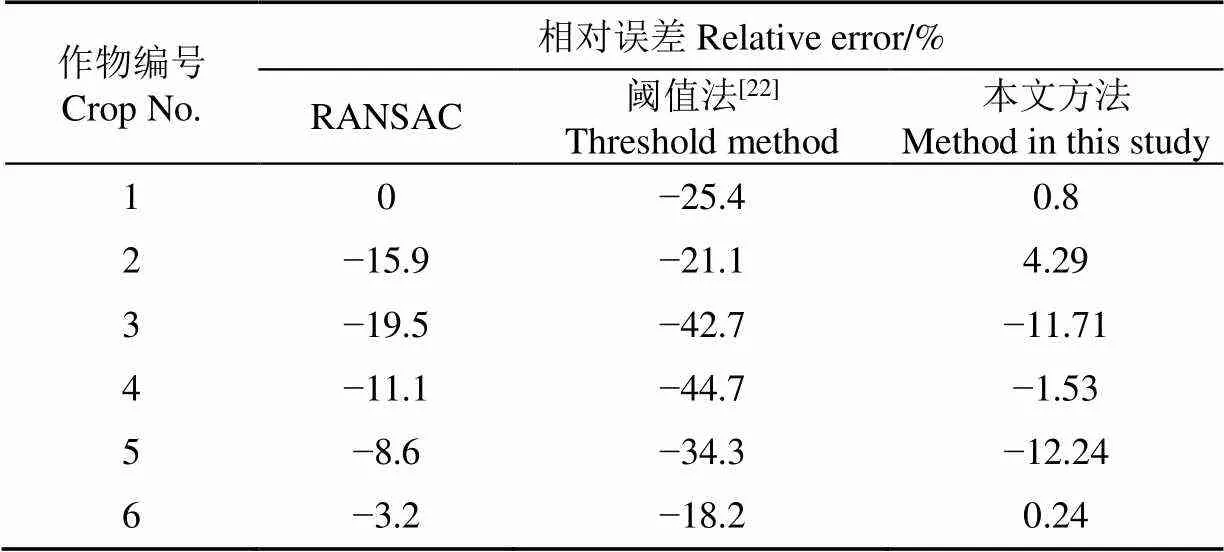

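Besides assessing the accuracy of the plant height calculation through relative error, this paper also compared it with 2 other methods, RANSAC and the threshold method[22]; the results are given in Table 5. The mean relative errors of the RANSAC algorithm and the threshold method were 9.72% and 31.07%, respectively, both higher than that of the proposed height calculation method. The proposed method can therefore compute crop height with comparatively high accuracy. The height statistics for one ridge of soybean plants are shown in Fig. 9.

Table 5 Comparison of 3 plant height calculation methods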

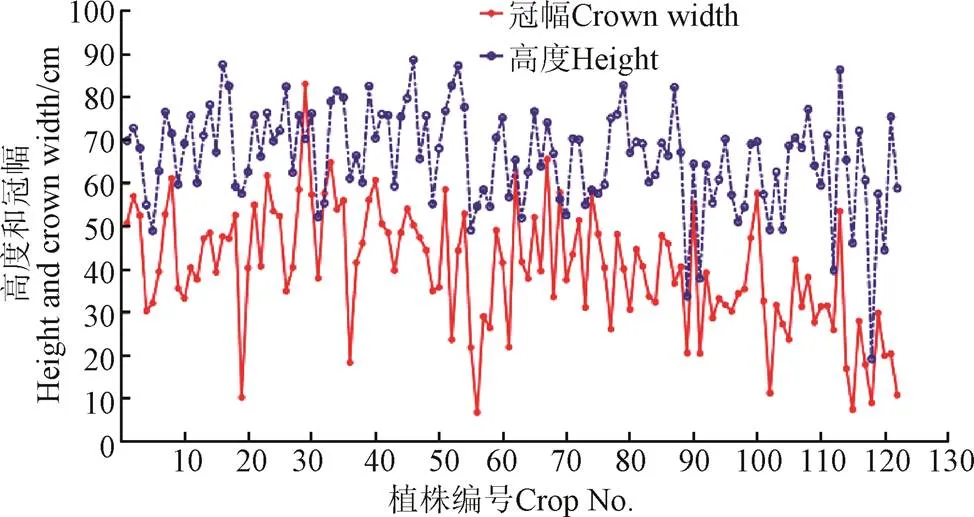

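Fig.9 Plant height and crown width

The mean height of the soybean plants was 65.9 cm with a standard deviation of 11.6 cm, and the mean crown width was 40.1 cm with a standard deviation of 14.1 cm. The data show that plant height and crown width vary considerably, which can provide decision information for variable-rate agricultural machinery.

2.4.2 Plant volume results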

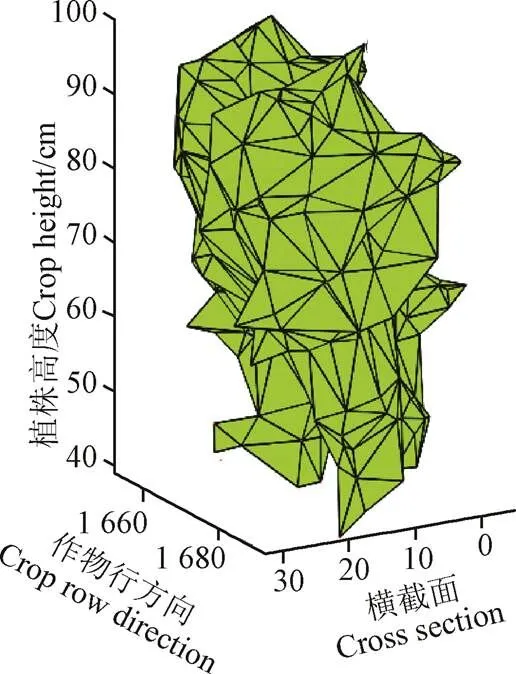

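Canopy volume is defined as the space occupied by the gap-free 3D mesh of the outer contour. Therefore, provided the mesh has no holes, the smaller the concavity value, the smaller the meshed volume and the closer it is to the true volume. Starting from the concavity value of 0.75 reported for the canopy volume of single orange trees[34], a concavity value suitable for the canopy of soybean plants at the pod-setting stage was sought. At a concavity value of 1.85, the gap-free envelope reconstructed by the alpha shape algorithm was considered a reasonable surface reconstruction, as shown in Fig. 10.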

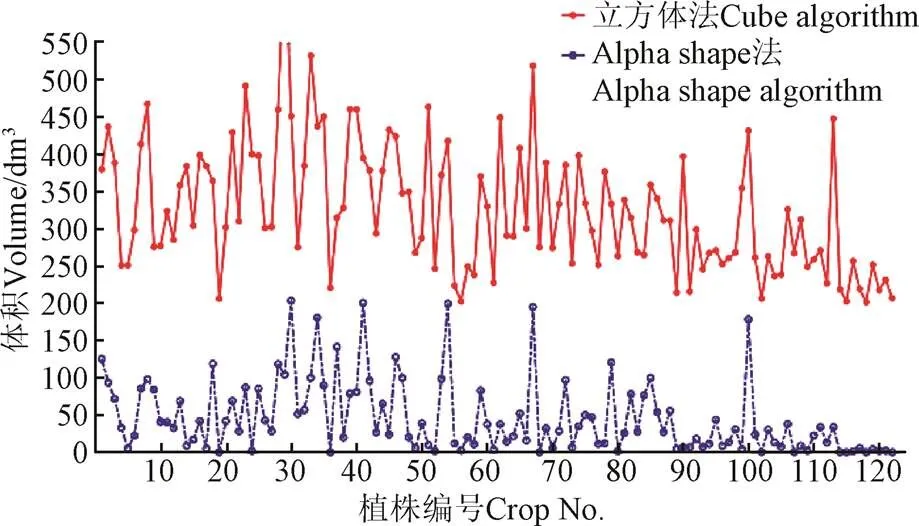

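Estimating crop volume with a regular-solid method (the cuboid method) severely overestimates it, because large gaps remain between the crop boundary and the regular solid[36]. The alpha shape algorithm with the optimized concavity value reconstructs the crop surface contour and reduces these gaps.

Fig. 11 shows the volumes of 122 soybean plants computed with the cuboid method and the alpha shape algorithm. The cuboid method gives volumes far larger than the alpha shape algorithm: the mean volume of the cuboid envelopes is 125.6 dm³, against 48.5 dm³ for the alpha shape algorithm, so the volumes computed here are closer to the actual plants. However, because the actual volume of the soybean plants was not measured, the computed results could not be compared with measurements; future work will quantify the relationship between the volume obtained by the alpha shape algorithm at a reasonable concavity value and the true volume.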
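For comparison, the cuboid method reduces to the volume of the axis-aligned bounding box of a plant's points. The sketch below assumes an axis-aligned box (the source does not say whether the box was oriented) and also computes the ratio of the two reported mean volumes; the names are illustrative.

```python
import numpy as np

def cuboid_volume(points):
    """Volume of the axis-aligned bounding box enclosing the points."""
    pts = np.asarray(points, float)
    extent = pts.max(axis=0) - pts.min(axis=0)
    return float(np.prod(extent))

# Hypothetical example: a 2 x 3 x 4 box defined by two opposite corners
box_volume = cuboid_volume([[0.0, 0.0, 0.0], [2.0, 3.0, 4.0]])

# Ratio of the mean volumes reported in the text (dm^3)
mean_cuboid_dm3, mean_alpha_dm3 = 125.6, 48.5
overestimate_ratio = mean_cuboid_dm3 / mean_alpha_dm3
```

On the reported means, the cuboid envelope is roughly 2.6 times the alpha shape volume, which quantifies the overestimation described above.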

Fig.10 Crop surface reconstruction results

Fig.11 Single-plant volume computed by different algorithms

3 Conclusions

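To acquire the height and volume of individual field soybean plants accurately and automatically, this paper studied their automatic extraction from 3D point clouds of field soybean and proposed a method based on semantic segmentation with local point cloud features and the mean shift algorithm. The results show that:

1) The 3D scanning detection system built here provides measurement accuracies of 0.58% (5.8 cm), −1.75% (−7.0 cm) and −1.74% (−3.4 cm) along the direction of travel, perpendicular to the direction of travel, and perpendicular to the ground, respectively.

2) The method based on a combination of 2D and 3D local features can classify field soybean plant and ground point clouds, with an AUC of 0.994.

3) For height calculation, the intersection of the plant stem with the ground is estimated as the mean of the projections of the crop point cloud's center of gravity onto a quadratic fitted surface (the ground). Compared with computing crop height directly from the minimum height, this reduces the mean relative error from 9.05% to 5.14%.

4) The alpha shape algorithm with the optimized concavity value reconstructs the crop surface contour and reduces the gaps left by regular-solid envelopes. The mean computed plant volume is 48.5 dm³, which is closer to the actual plants than the result of the cuboid envelope method.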

[1]Pan Yuchun, Zhao Chunjiang. Application of geographic information technologies in precision agriculture[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2003, 19(4): 1-6. (in Chinese with English abstract)

[2]Wang Wanzhang, Hong Tiansheng, Li Jie, et al. Review of the pesticide precision orchard spraying technologies[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2004, 20(6): 98-101. (in Chinese with English abstract)

[3]Qiu Baijing, Yan Run, Ma Jing, et al. Research progress analysis of variable rate sprayer technology[J]. Transactions of the Chinese Society for Agricultural Machinery, 2015, 46(3): 59-72. (in Chinese with English abstract)

[4]Liu Jiangang, Zhao Chunjiang, Yang Guijun, et al. Review of field-based phenotyping by unmanned aerial vehicle remote sensing platform[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2016, 32(24): 98-106. (in Chinese with English abstract)

[5]Tumbo S D, Salyani M, Whitney J D, et al. Investigation of laser and ultrasonic ranging sensors for measurements of citrus canopy volume[J]. Transaction of the ASAE, 2002, 18(3): 367-372.

[6]Li Hanzhe, Zhai Changyuan, Weckler P, et al. A canopy density model for planar orchard target detection based on ultrasonic sensors[J]. Sensors, 2016, 17(1): 31-45.

[7]Maghsoudi H, Minaei S, Ghobadian B, et al. Ultrasonic sensing of pistachio canopy for low-volume precision spraying[J]. Computers and Electronics in Agriculture, 2015, 112: 149-160.

[8]Zou Wei, Wang Xiu, Deng Wei, et al. Design and test of automatic toward-target sprayer used in orchard[C]. Shenyang: IEEE, 2015: 697-702.

[9]Li Li, Li Heng, He Xiongkui, et al. Development and experiment of automatic detection device for infrared target[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2012, 28(12): 159-163. (in Chinese with English abstract)

[10]Liu Mingfeng, Hu Xianpeng, Liao Yitao, et al. Morphological parameters characteristics of mechanically transplanted plant in suitable transplanting period for different rape varieties[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2015, 31(Supp.1): 79-88. (in Chinese with English abstract)

[11]Wang Chuanyu, Du Jianjun, Guo Xinyu, et al. Maize crop drought stress phenotype testing method based on time-series images[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2016, 32(21): 189-195. (in Chinese with English abstract)

[12]Juliane B, Andreas B, Simon B, et al. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging[J]. Remote Sensing, 2014, 6(11): 10395-10412.

[13]Sun Zhihui, Lu Shenglian, Guo Xinyu, et al. Surfaces reconstruction of crop leaves based on point cloud data[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2012, 28(3): 184-190. (in Chinese with English abstract)

[14]Liu Hui, Li Ning, Shen Yue, et al. Spray target laser scanning detection and three-dimensional reconstruction under simulated complex terrain[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2016, 32(18): 84-91. (in Chinese with English abstract)

[15]Babcock C, Finley A O, Andersen H E, et al. Geostatistical estimation of forest biomass in interior Alaska combining Landsat-derived tree cover, sampled airborne LiDAR and field observations[J]. Remote Sensing of Environment, 2018, 212: 212-230.

[16]Méndez V, Rosell-Polo J R, Pascual M, et al. Multi-tree woody structure reconstruction from mobile terrestrial laser scanner point clouds based on a dual neighbourhood connectivity graph algorithm[J]. Biosystems Engineering, 2016, 148: 34-47.

[17]Guo Cailing, Zong Ze, Zhang Xue, et al. Apple tree canopy geometric parameters acquirement based on 3D point clouds[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(3): 175-181. (in Chinese with English abstract)

[18]Sanz R, Rosell J, Llorens J, et al. Relationship between tree row LIDAR-volume and leaf area density for fruit orchards and vineyards obtained with a LIDAR 3D dynamic measurement system[J]. Agricultural and Forest Meteorology, 2013, 172(3): 153-162.

[19]Sanz R, Llorens J, Escolà A, et al. LIDAR and non-LIDAR-based canopy parameters to estimate the leaf area in fruit trees and vineyard[J]. Agricultural and Forest Meteorology, 2018, 260: 229-239.

[20]Liu Hui, Li Ning, Shen Yue, et al. Spray target detection based on laser scanning sensor and real-time correction of IMU attitude angle[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2017, 33(15): 88-97. (in Chinese with English abstract)

[21]Garrido M, Paraforos D, Reiser D, et al. 3D maize crop reconstruction based on georeferenced overlapping LiDAR point clouds[J]. Remote Sensing, 2015, 7(12): 17077-17096.

[22]Sun Shangpeng, Li Changying, Paterson A H, et al. In-field high throughput phenotyping and cotton crop growth analysis using LiDAR[J]. Frontiers in Crop Science, 2018, 9: 16-37.

[23]Escola A, Martínez-Casasnovas J A, Rufat J, et al. Mobile terrestrial laser scanner applications in precision fruticulture/ horticulture and tools to extract information from canopy point clouds[J]. Precision Agriculture, 2017, 18(1): 111-132.

[24]Cheng Man, Cai Zhenjiang, Wang Ning, et al. System design for peanut canopy height information acquisition based on LiDAR[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2019, 35(1): 180-187. (in Chinese with English abstract)

[25]Martin C, Morten L, Rasmus J, et al. Designing and testing a UAV mapping system for agricultural field surveying[J]. Sensors, 2017, 17(12): 2703-2722.

[26]Ravi R, Lin Y J, Shamseldin T, et al. Implementation of UAV-Based lidar for high throughput phenotyping[C]. Valencia: IEEE, 2018: 8761-8764.

[27]Xia Chunhua, Shi Ying, Yin Wenqing. Obtaining and denoising method of three-dimensional point cloud data of plants based on TOF depth sensor[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2018, 34(6): 168-174. (in Chinese with English abstract)

[28]Dittrich A, Weinmann M, Hinz S. Analytical and numerical investigations on the accuracy and robustness of geometric features extracted from 3D point cloud data[J]. ISPRS Journal of Photogrammetry and Remote Sensing, 2017, 126: 195-208.

[29]Raileanu L E, Stoffel K. Theoretical comparison between the gini index and information gain criteria[J]. Annals of Mathematics and Artificial Intelligence, 2004, 41(1): 77-93.

[30]Spolaor N, Cherman E A, Monard M C, et al. ReliefF for multi-label feature selection[C]. Fortaleza: IEEE, 2013: 6-11.

[31]Ruß G, Brenning A. Data mining in precision agriculture: management of spatial information[C]. Berlin: Springer, 2010: 350-359.

[32]Bernardini F, Mittleman J, Rushmeier H, et al. The ball-pivoting algorithm for surface reconstruction[J]. IEEE Transactions on Visualization and Computer Graphics, 1999, 5(4): 349-359.

[33]Weiss U, Biber P. Plant detection and mapping for agricultural robots using a 3D LIDAR sensor[J]. Robotics and Autonomous Systems, 2011, 59(5): 265-273.

[34]Colaço A, Trevisan R, Molin J, et al. A method to obtain orange crop geometry information using a mobile terrestrial laser scanner and 3D modeling[J]. Remote Sensing, 2017, 9(8): 763-784.

[35]Heinz E, Eling C, Wieland M, et al. Development, calibration and evaluation of a portable and direct georeferenced laser scanning system for kinematic 3D mapping[J]. Journal of Applied Geodesy, 2015, 9(4): 227-243.

[36]Yan Zhaojin, Liu Rufei, Cheng Liang, et al. A concave hull methodology for calculating the crown volume of individual trees based on vehicle-borne LiDAR data[J]. Remote Sensing, 2019, 11(6): 623-642.

Extraction of geometric parameters of soybean canopy by airborne 3D laser scanning

Guan Xianping1, Liu Kuan1, Qiu Baijing1※, Dong Xiaoya1, Xue Xinyu2

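(1. Key Laboratory of Plant Protection Engineering, Ministry of Agriculture and Rural Affairs, Jiangsu University, Zhenjiang 212013, China; 2. Nanjing Research Institute for Agricultural Mechanization, Ministry of Agriculture and Rural Affairs, Nanjing 210014, China)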

Accurate acquisition and analysis of crop geometric information is an important basis for implementing precision agriculture. Canopy height and volume are important decision parameters for the application rate of variable-rate sprayers. In the field, large changes in ambient light strongly affect sensor measurements of canopy geometry. At the same time, few studies have addressed how to remove the effect of ground roughness, or the difficulty of distinguishing individual plants whose branches and leaves interlace, under the ridge planting mode of field soybean. It is therefore necessary to design an information acquisition system that is less affected by lighting conditions and an algorithm that improves the extraction of geometric information from individual plants. In this study, a crop phenotype detection system based on airborne LiDAR was constructed and its accuracy was verified. A method for extracting individual plants based on local geometric feature segmentation and the mean shift algorithm was proposed. To classify soybean plants and ground, the local geometric features constructed in the optimal neighborhood were first divided into 2D and 3D local shape features according to their dimensions. Second, to select combinations of 5 features strongly related to classification, all features were evaluated with the Gini index, Chi-square and ReliefF algorithms and the random forest method. Finally, for each feature combination, a random forest classifier was used to predict the test set data. To extract individual soybean plants, the mean shift algorithm was applied to the plant point cloud to obtain the point cloud of each single plant. In obtaining the geometric information of single plants, plant height was defined as the height difference between the intersection of the soybean stem with the ground and the highest point of the plant. In actual measurement the laser beam is blocked by branches and leaves, so this intersection is difficult to obtain; it was therefore estimated by projecting the center of gravity of the single-plant point cloud onto the fitted ground surface. Plant height was then obtained by subtracting the estimated intersection from the maximum point. The experimental results showed that the maximum relative errors of the LiDAR scanning measurement system along the carrier's direction of travel, perpendicular to the direction of travel, and perpendicular to the ground were 0.58% (5.8 cm), −1.75% (−7.0 cm) and −1.74% (−3.4 cm), respectively. In classifying soybean crop and ground, the AUC (area under curve) of the ROC (receiver operating characteristic) curve was 0.994, a good classification result obtained with a feature combination selected from 26 features by the random forest algorithm. The mean relative error between the number of detected plants and the number of manually counted plants was 11.83%, and the distribution correlation was highest, at 0.675, when the mean shift bandwidth was 20 cm. The mean relative error of the height estimation method in this paper was 5.14%, better than that of the RANSAC algorithm. This paper can provide a reference for crop segmentation and volume statistics. Future research should focus on converting the obtained target crop information into a prescription map and storing it on a server for use in online spraying.

laser; extraction; data processing; point cloud of single crop; geometric parameters

Guan Xianping, Liu Kuan, Qiu Baijing, Dong Xiaoya, Xue Xinyu. Extraction of geometric parameters of soybean canopy by airborne 3D laser scanning[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2019, 35(23): 96-103. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2019.23.012 http://www.tcsae.org

2019-05-11

2019-10-07

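National Key Research and Development Program of China (2017YFD0701403)

Guan Xianping, associate researcher, mainly engaged in research on precision pesticide application technology. Email: xpguan@ujs.edu.cn

Qiu Baijing, professor, doctoral supervisor, mainly engaged in research on agricultural plant protection machinery. Email: qbj@ujs.edu.cn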

10.11975/j.issn.1002-6819.2019.23.012

S24

A

1002-6819(2019)-23-0096-08