Evaluation of deep learning algorithms for crop identification based on GF-6 time series data

Chen Shiyang, Liu Jia※

(Institute of Agricultural Resources and Regional Planning, Chinese Academy of Agricultural Sciences, Beijing 100081)

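Crop type mapping is an important part of agricultural remote sensing. Using GF-6 time series data, this study qualitatively and quantitatively evaluated the crop type mapping performance of six deep learning models built on three mechanisms (convolution, recurrence and attention) in Heilongjiang Province. The results show that, for the three main crops (soybean, maize and rice), all models achieved F1 scores of no less than 89%, 84% and 97%, respectively, with overall accuracies of 93%~95%. After the models were transferred to a different location, overall accuracy dropped by 7.2%~41.0%; the convolution- and recurrence-based models retained strong crop identification ability and outperformed the attention-based model and the random forest model. In terms of time consumption, training plus inference for each deep learning model took no more than 6.2 times as long as for the random forest model. GF-6 time series data combined with deep learning models meet the needs of high-accuracy, large-area crop mapping in both classification accuracy and run time, and transfer better than the traditional model. The results can serve as a reference for applying deep learning to crop classification from remote sensing in Heilongjiang.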

crops; remote sensing; recognition; deep learning; GF-6; time series; Heilongjiang

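0 Introduction

Crop type mapping based on medium- and high-spatial-resolution remote sensing data is one of the most important management tools in operational agricultural monitoring. Heilongjiang Province is a major grain-producing region of China and plays an important role in food security; timely and accurate knowledge of crop distribution and planted area in Heilongjiang is of great significance for crop yield estimation and agricultural policy making. In recent years, the GF-6 satellite has operated stably in orbit and has continuously delivered high-quality data with a 4 d revisit period. Time series that cover more crop growth stages improve crop identification accuracy[1], but they also multiply the volume of data to be processed, so efficient machine learning classification algorithms have become more important. Many researchers in China and abroad have studied crop classification from remote sensing, but the traditional methods used, such as minimum distance, support vector machine and random forest (Random Forest, RF), have two problems. First, they struggle to extract deep features; the features they extract are single or few shallow, low-level features. Second, traditional methods can only be used for a specific region and time, and transfer poorly[2]. In recent years, the use of deep learning to analyze time series remote sensing data has grown rapidly. Deep learning algorithms provide effective support for analyzing complex data; in particular, the convolutional neural network (Convolutional Neural Network, CNN)[3] and the recurrent neural network (Recurrent Neural Network, RNN)[4] have been shown to explore spatial and temporal structure effectively and have been applied to crop identification from remote sensing.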

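Convolutional neural networks have been widely applied to remote sensing tasks, including land cover classification of very high resolution imagery[5-6], semantic segmentation[7], object detection[8], data imputation[9] and fusion[10]. In these works, CNNs exploit the spatial or temporal structure of the data by applying convolutions along different dimensions. For land cover classification, CNNs include 1D-CNNs across the spectral or temporal dimension[11-12], 2D-CNNs across the spatial dimensions[13], 3D-CNNs across the spectral and spatial dimensions[14] and 3D-CNNs across the temporal and spatial dimensions[15]. Although 1D-CNNs have been widely used in time series classification[16], they have been applied to land cover mapping only in recent years[17]; for example, Pelletier et al.[12] developed a 1D-CNN that applies convolutions in the temporal domain in order to evaluate, qualitatively and quantitatively, how network architecture affects crop mapping. Recurrent neural networks take sequence data as input, recur in the forward direction and retain features from the preceding context. The RNN is the most widely used architecture in time series classification research and has been applied successfully to land cover classification with time series optical data[18-20] and multi-temporal synthetic aperture radar data[21]. For example, Campos-Taberner et al.[22] used Sentinel-2 data and an RNN based on a two-layer bidirectional long short-term memory network to reach an overall accuracy of 98.7% for crop mapping in the province of Valencia, Spain, and used noise permutation in the temporal and spectral domains to evaluate how different spectral and temporal features affect classification accuracy. Some researchers have also combined CNNs and RNNs for crop classification[23] and remote sensing change detection[24-25]. The attention mechanism (Attention Mechanism, AM)[26] differs from traditional CNNs and RNNs in that it consists only of self-attention and feed-forward neural networks. In remote sensing image processing, attention mechanisms have been used to improve very high resolution image classification[27-28] and to capture spatial and spectral dependencies[29]. Rußwurm et al.[23] proposed an encoder architecture with convolutional recurrent layers, introduced an attention mechanism, and ran crop classification experiments in Bavaria, Germany, with Sentinel-2 time series data, showing qualitatively how self-attention extracts classification-relevant features.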

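In recent years, researchers have studied crop classification algorithms extensively, but the time series data of the GF-6 satellite wide field of view camera (GF-6 Satellite Wide Field of View, GF-6/WFV) have seldom been used, so the high temporal resolution of GF-6/WFV has not been exploited in crop classification from remote sensing. Moreover, most deep learning models used in crop classification studies are semantic segmentation models from computer vision, and deep learning models from the time series domain are underused. The latter can recognize the distinctive temporal signals of crops at different growth stages and use them as key features for separating crop types when building the discriminant function. This paper targets GF-6/WFV time series data and time series deep learning models, and evaluates and compares the performance of convolutional neural networks, recurrent neural networks, the attention mechanism and a traditional algorithm for crop classification mapping in Heilongjiang, to provide a reference for applying deep learning to crop classification from remote sensing.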

1 Materials and methods

1.1 Study area

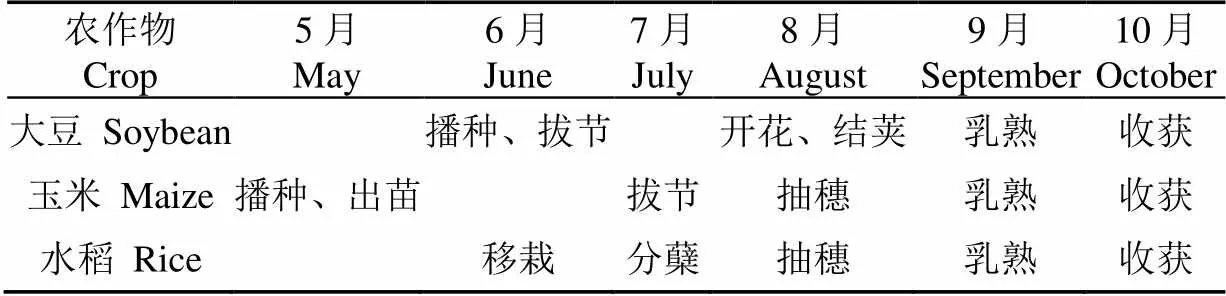

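Lindian County and Hailun City in Heilongjiang Province were selected as the study areas. The main crops in both areas are rice, maize and soybean, and their planting structure is representative of the plain areas of Heilongjiang Province, which makes them suitable for evaluating model transferability. The phenological calendar of the main crops in the study areas is shown in Table 1. The study areas lie at the northeastern end of the Songnen Plain and have a north temperate continental monsoon climate, with a mean annual temperature of 4 ℃, a frost-free period of about 120 d and annual precipitation of 400~600 mm. Lindian County covers 3 503 km² and has about 166 000 hm² of cultivated land; Hailun City is 145 km from Lindian County, covers 4 667 km² and has about 294 000 hm² of cultivated land. The location of the study areas within Heilongjiang Province is shown in Fig.1, and GF-6/WFV false-color composite images are shown in Fig.2.

Table 1 Phenological calendar of the main crops in the study areas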

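1.2 Remote sensing data acquisition and processing

GF-6/WFV has a spatial resolution of 16 m, a swath width of 800 km and a revisit period of 4 d. Compared with other "Gaofen" satellites it adds red-edge, yellow and other bands, and it is China's first high-resolution satellite for precision agriculture observation. Its spectral response functions are shown in Fig.3.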

Note: Green square areas were analyzed visually.

Fig.2 GF-6/WFV false-color (near-infrared, red, green) composite images of the study areas

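To extract classification features for the three crops at different growth stages, data were acquired from early April to early November 2020. The time series for the Lindian and Hailun study areas were assembled from 41 and 48 GF-6/WFV scenes, respectively. As shown in Fig.4, most of each study area is covered by more than 35 high-quality clear-sky scenes.

Preprocessing comprised radiometric calibration, top-of-atmosphere reflectance calculation, 6S atmospheric correction and RPC (Rational Polynomial Coefficient) correction; the preprocessing code is available in the online repository https://github.com/GenghisYoung233/Gaofen-Batch. A target time series length was set: for pixels with too few observations, some time steps were randomly duplicated or the pixel was discarded entirely, and for pixels with too many observations, some time steps were randomly dropped. This addresses two problems: some scenes do not cover the whole study area, and the two study areas have time series of different lengths, which would prevent model transfer. In the end, both study areas had time series of 35 scenes.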
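The length adjustment described above can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `fix_series_length`, the `min_len` threshold below which a pixel is discarded, and the choice to keep observations in date order are all assumptions, since the text does not state them.

```python
import numpy as np

def fix_series_length(series, target_len, min_len, rng):
    """Bring one pixel's time series to exactly target_len time steps.

    series: array of shape (T, bands), valid observations in date order.
    min_len: hypothetical threshold; shorter series are discarded (None).
    """
    t = series.shape[0]
    if t < min_len:
        return None  # too few observations: drop the pixel entirely
    if t > target_len:
        # Randomly drop time steps, keeping the survivors in date order.
        keep = np.sort(rng.choice(t, size=target_len, replace=False))
        return series[keep]
    if t < target_len:
        # Randomly duplicate time steps until the target length is reached.
        extra = rng.choice(t, size=target_len - t, replace=True)
        idx = np.sort(np.concatenate([np.arange(t), extra]))
        return series[idx]
    return series
```

With `target_len=35`, a 48-scene Hailun pixel and a 30-scene pixel both come out with shape `(35, 8)`, so a model trained on one study area can read inputs from the other.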

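Global max/min normalization was applied to the time series to reduce the effect of shifts in the data distribution of the hidden layers on parameter training, which speeds up and stabilizes convergence. For the interpolated time series, all pixels of the first band across all dates were treated as a whole; the maximum and minimum were taken after removing the 2% most extreme values and used to normalize all pixels of the first band, and the same was done for each of the 8 bands in turn. Unlike Z-score normalization, the result retains the scale and magnitude information that plays a key role in crop identification, preserves the temporal trend of the series and avoids the influence of extreme values.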
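A minimal sketch of this per-band global normalization is given below. It assumes that "removing the 2% most extreme values" means taking the 2nd and 98th percentiles as the minimum and maximum, and that values outside that range are clipped to [0, 1]; both are assumptions, and the name `global_minmax` is illustrative.

```python
import numpy as np

def global_minmax(cube, lower_pct=2.0, upper_pct=98.0):
    """Normalize each band with one global min/max over all dates and pixels.

    cube: array of shape (T, bands, H, W).
    lower_pct / upper_pct: assumed percentile cut-offs for extreme values.
    """
    out = np.empty(cube.shape, dtype=np.float32)
    for b in range(cube.shape[1]):
        band = cube[:, b]  # all dates and pixels of one band, as a whole
        lo, hi = np.percentile(band, [lower_pct, upper_pct])
        scaled = (band - lo) / max(hi - lo, 1e-12)  # guard against flat bands
        out[:, b] = np.clip(scaled, 0.0, 1.0)
    return out
```

Because a single pair of bounds is shared by every date of a band, the seasonal rise and fall of each pixel's reflectance keeps its shape, which per-date or per-pixel scaling would flatten.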

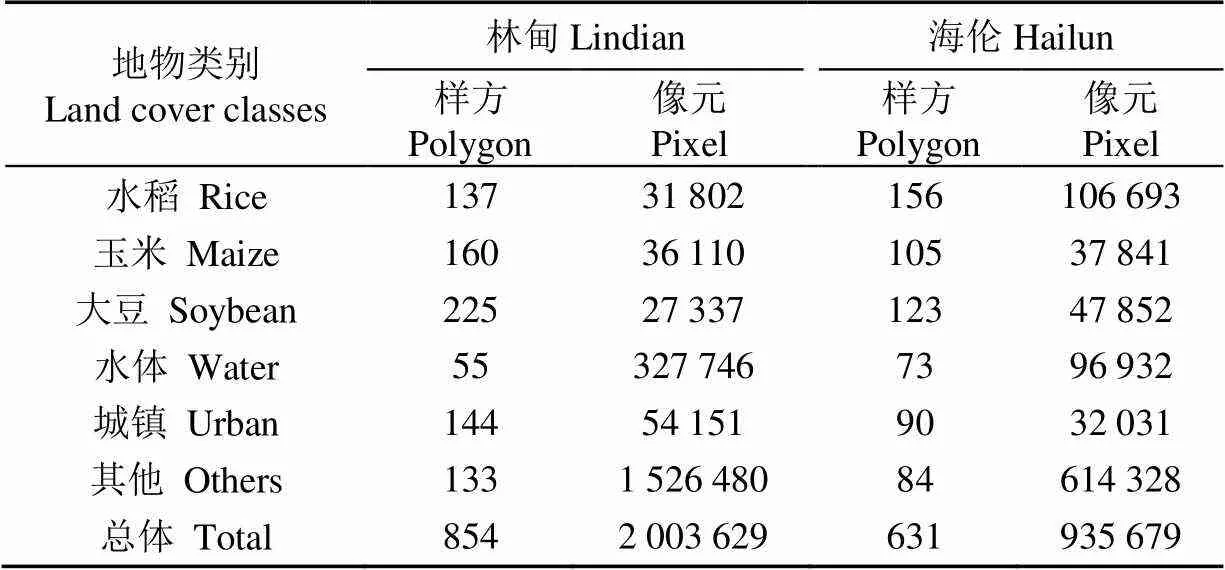

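1.3 Ground truth data acquisition

Ground truth data were obtained by combining field survey data with visual interpretation of GF-2 high-resolution data. During the field survey, mobile devices running the Ovital Map (奥维地图) software recorded the location and land cover type of each sample point. Land cover types included crops such as soybean, rice, maize and coarse cereals, and non-crops such as water, built-up areas and grassland. The boundary of the field containing each sample point was then delineated by visual interpretation of GF-2 data to draw sample polygons. Finally, the polygons were grouped into six classes: rice, maize, soybean, water, urban and other (natural land cover such as grassland and woodland). Lindian has 854 polygons covering 2 003 629 pixels and Hailun has 631 polygons covering 935 679 pixels, as shown in Table 2.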

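Table 2 Number of instances of each land cover class at polygon and pixel level

For each class in the Lindian ground data, 70% of the polygons were randomly selected, and all of their pixels with the corresponding class labels formed the training set. From the remaining 30% of polygons, 1 000 pixels per class were randomly drawn with their labels to form the validation set; keeping the classes balanced avoids distorting the accuracy assessment. This procedure was repeated 5 times to produce 5 different training/validation sets for the subsequent experiments, each generated independently. The Hailun datasets were generated the same way, and only the validation sets were used in the subsequent experiments.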
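The polygon-level split can be sketched as below. Splitting by polygon rather than by pixel keeps pixels from the same field out of both sets at once. The data layout (a list of dicts with `label` and `pixels`) and the function name are hypothetical.

```python
import random
from collections import defaultdict

def split_by_polygon(polygons, train_frac=0.7, val_pixels_per_class=1000, seed=0):
    """Split labelled polygons into training pixels and balanced validation pixels.

    polygons: list of {"label": str, "pixels": list of pixel ids} (assumed layout).
    Returns two lists of (pixel id, label) pairs.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for poly in polygons:
        by_class[poly["label"]].append(poly)

    train, val = [], []
    for label, polys in by_class.items():
        polys = polys[:]
        rng.shuffle(polys)
        n_train = round(train_frac * len(polys))
        # Every pixel of the selected training polygons goes to the training set.
        for poly in polys[:n_train]:
            train.extend((px, label) for px in poly["pixels"])
        # A fixed number of pixels per class is drawn from the held-out polygons.
        held_out = [px for poly in polys[n_train:] for px in poly["pixels"]]
        k = min(val_pixels_per_class, len(held_out))
        val.extend((px, label) for px in rng.sample(held_out, k))
    return train, val
```

Calling the function with five different `seed` values would give five training/validation pairs, in the spirit of the five repetitions described above.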

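1.4 Models

This experiment used six deep learning models built on three mechanisms (convolution, recurrence and attention) plus a random forest model as the baseline; all are commonly used or recent models in crop classification from remote sensing. The experiment code is available in the online repository https://github.com/GenghisYoung233/DongbeiCrops.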

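For the convolution-based mechanism, three convolutional neural network models were selected: TempCNN (Temporal Convolutional Neural Network)[12], MSResNet (Multi Scale 1D Residual Network) and OmniscaleCNN (Omniscale Convolutional Neural Network)[30]. TempCNN stacks three convolutional layers with the same filter size, followed by a fully connected layer and a softmax layer. MSResNet starts with a convolutional layer and a max pooling layer and then feeds three branches, where the data pass through 6 consecutive convolutional filters of different lengths and a global pooling layer. Within each branch, a ResNet residual connection is added across every 3 convolutional layers to counter vanishing or exploding gradients. The branch outputs are then concatenated and passed through a fully connected layer and a softmax layer. OmniscaleCNN consists of three convolutional layers, a global pooling layer and a softmax layer.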

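For the recurrence-based mechanism, two recurrent neural network models were selected: LSTM (Long Short-Term Memory)[31] and StarRNN (Star Recurrent Neural Network)[32]. An LSTM unit consists of a memory cell, an input gate, an output gate and a forget gate; the memory cell stores historical information and the three gates control the flow of information into and out of the unit. StarRNN needs fewer parameters than LSTM or the gated recurrent unit (Gated Recurrent Unit, GRU) and is designed to mitigate the vanishing gradient problem.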
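For reference, the memory cell and three gates described above are commonly written as follows. This is the widely used textbook formulation, not necessarily the exact variant in the authors' code; here $x_t$ is the input at time step $t$ (for example the 8 band values of one acquisition), $h_t$ the hidden state, $c_t$ the memory cell, $\sigma$ the logistic sigmoid and $\odot$ element-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive update of $c_t$ is what lets information from early growth stages persist to the end of the season without being repeatedly squashed.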

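For the attention-based mechanism, the Transformer model[26] was selected. The Transformer is a sequence-to-sequence encoder-decoder model originally used for natural language translation; for crop identification from remote sensing, this experiment keeps only the encoder.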
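The core operation of the Transformer encoder is scaled dot-product self-attention, as defined in the original Transformer paper[26]. For a time series with $T$ acquisitions, the queries $Q$, keys $K$ and values $V$ are linear projections of the same $T$ input vectors, and $d_k$ is the key dimension:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

Each row of the $T \times T$ softmax matrix says how much one acquisition date draws on every other date, so the model has no built-in notion of temporal order or locality beyond what positional encoding supplies.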

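For the traditional classification algorithm, random forest was selected. Random forest uses bootstrap resampling to draw multiple samples from the original sample set, builds a decision tree on each bootstrap sample, and then combines the predictions of the trees by voting to obtain the final prediction[33]. The random forest algorithm can handle the high-dimensional features of time series data, is easy to tune[34] and is robust to mislabeled data[35]; together with the support vector machine, it currently dominates among traditional algorithms.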
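The two ingredients named above, bootstrap resampling and voting, can be illustrated in a few lines. This sketch deliberately leaves out the decision trees themselves; the function names are illustrative and do not come from any library.

```python
import random
from collections import Counter

def bootstrap_sample(samples, rng):
    """Draw len(samples) items with replacement (one bootstrap sample)."""
    n = len(samples)
    return [samples[rng.randrange(n)] for _ in range(n)]

def majority_vote(tree_predictions):
    """Combine the class labels predicted by individual trees for one pixel."""
    return Counter(tree_predictions).most_common(1)[0][0]
```

For example, if three trees predict `"maize"`, `"soybean"` and `"maize"` for a pixel, `majority_vote` returns `"maize"`.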

1.5 Evaluation procedure

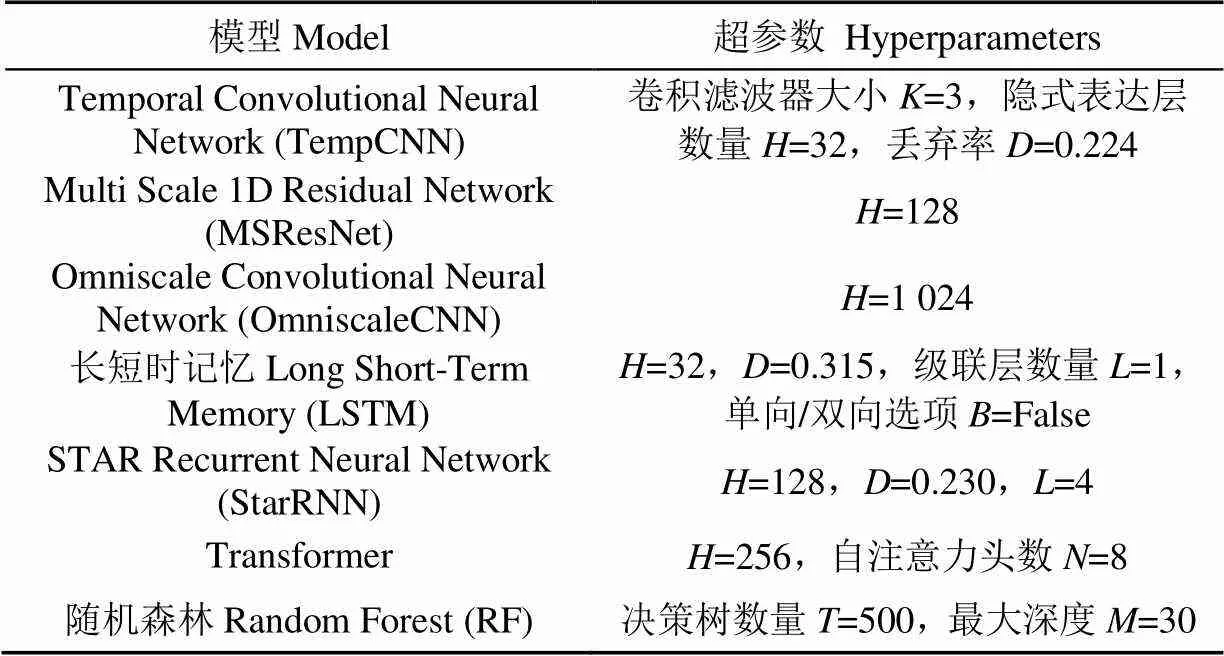

Model architecture and training depend on various hyperparameters, which may differ with the objective and the dataset. For the deep learning models, these hyperparameters include the dimension of the hidden vector, the number of layers, the number of self-attention heads, the convolution kernel size, the dropout rate, the learning rate and the weight decay rate. Several options were defined for each hyperparameter of a model and combined exhaustively for hyperparameter selection. For TempCNN, the hyperparameter options were convolution filter size, number of hidden layers and dropout rate (5 values, log-normally distributed); for MS-ResNet, the hyperparameter option was […]; for OmniscaleCNN, this paper follows the model authors' recommendation[30] and changes no hyperparameters, with […] set to 1 024; for LSTM and StarRNN, the hyperparameter options were […], […] and number of cascade layers, with an additional unidirectional/bidirectional option for LSTM; for Transformer, the hyperparameter options were […] and number of self-attention heads; for RF, previous work found that tuning its parameters brings only a slight performance gain[34], so this paper uses a standard setting: 500 decision trees and a maximum depth of 30. Combining the hyperparameter options gave 45 TempCNN models, 5 MS-ResNet models, 1 OmniscaleCNN model, 120 LSTM models, 60 StarRNN models, 40 Transformer models and 1 RF model. All hyperparameter combinations were first evaluated on the Lindian training and validation sets, and the best combination was chosen by overall accuracy.
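The exhaustive combination and selection step can be sketched as a plain grid search. The option values below are hypothetical placeholders: the text gives only the hyperparameter names and the resulting count of 45 TempCNN models, and a 3 × 3 × 5 grid is just one way to reach that count. The `evaluate` callback stands in for training a model and returning its overall accuracy on the validation set.

```python
from itertools import product

# Hypothetical search space; names follow the text, values are made up.
TEMPCNN_SPACE = {
    "kernel_size": [3, 5, 7],
    "hidden_layers": [1, 2, 3],
    "dropout": [0.1, 0.2, 0.3, 0.4, 0.5],
}

def expand_grid(space):
    """Return every combination of the listed options as a list of dicts."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in product(*(space[k] for k in keys))]

def select_best(configs, evaluate):
    """Pick the configuration with the highest score from evaluate(config)."""
    scored = [(evaluate(cfg), cfg) for cfg in configs]
    best_score, best_cfg = max(scored, key=lambda pair: pair[0])
    return best_cfg, best_score
```

`len(expand_grid(TEMPCNN_SPACE))` is 45 for this placeholder space.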

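Once the best hyperparameter combination was determined, each model was first trained and validated on the Lindian training and validation sets, yielding the trained model, the classified Lindian image and four accuracy metrics: overall accuracy, producer's accuracy, user's accuracy and F1 score. The model trained on Lindian was then tested for transferability on the Hailun validation set, yielding the classified Hailun image and its accuracy metrics. To avoid statistical error[36], this procedure was run on the 5 different datasets and the median was taken as the final result. Finally, the final results of all models were compared and analyzed.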
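The four accuracy metrics follow from a confusion matrix in the usual way, and the final value is the median over the five runs. The sketch below uses the standard definitions (producer's accuracy as recall, user's accuracy as precision, F1 as their harmonic mean); the function name and the row/column convention are assumptions.

```python
from statistics import median

def accuracy_metrics(cm):
    """Compute overall accuracy and per-class PA, UA and F1.

    cm[i][j]: number of pixels of reference class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    oa = sum(cm[i][i] for i in range(n)) / total
    per_class = []
    for i in range(n):
        ref_total = sum(cm[i])                         # reference pixels of class i
        pred_total = sum(cm[r][i] for r in range(n))   # pixels predicted as class i
        pa = cm[i][i] / ref_total if ref_total else 0.0    # producer's accuracy
        ua = cm[i][i] / pred_total if pred_total else 0.0  # user's accuracy
        f1 = 2 * pa * ua / (pa + ua) if pa + ua else 0.0
        per_class.append({"PA": pa, "UA": ua, "F1": f1})
    return oa, per_class
```

Given the overall accuracies of the five runs in a list `runs`, the reported figure would be `median(runs)`.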

2 Results and analysis

The training results of all hyperparameter combinations were compared to determine the final hyperparameters, shown in Table 3.

Table 3 Final hyperparameters of each model

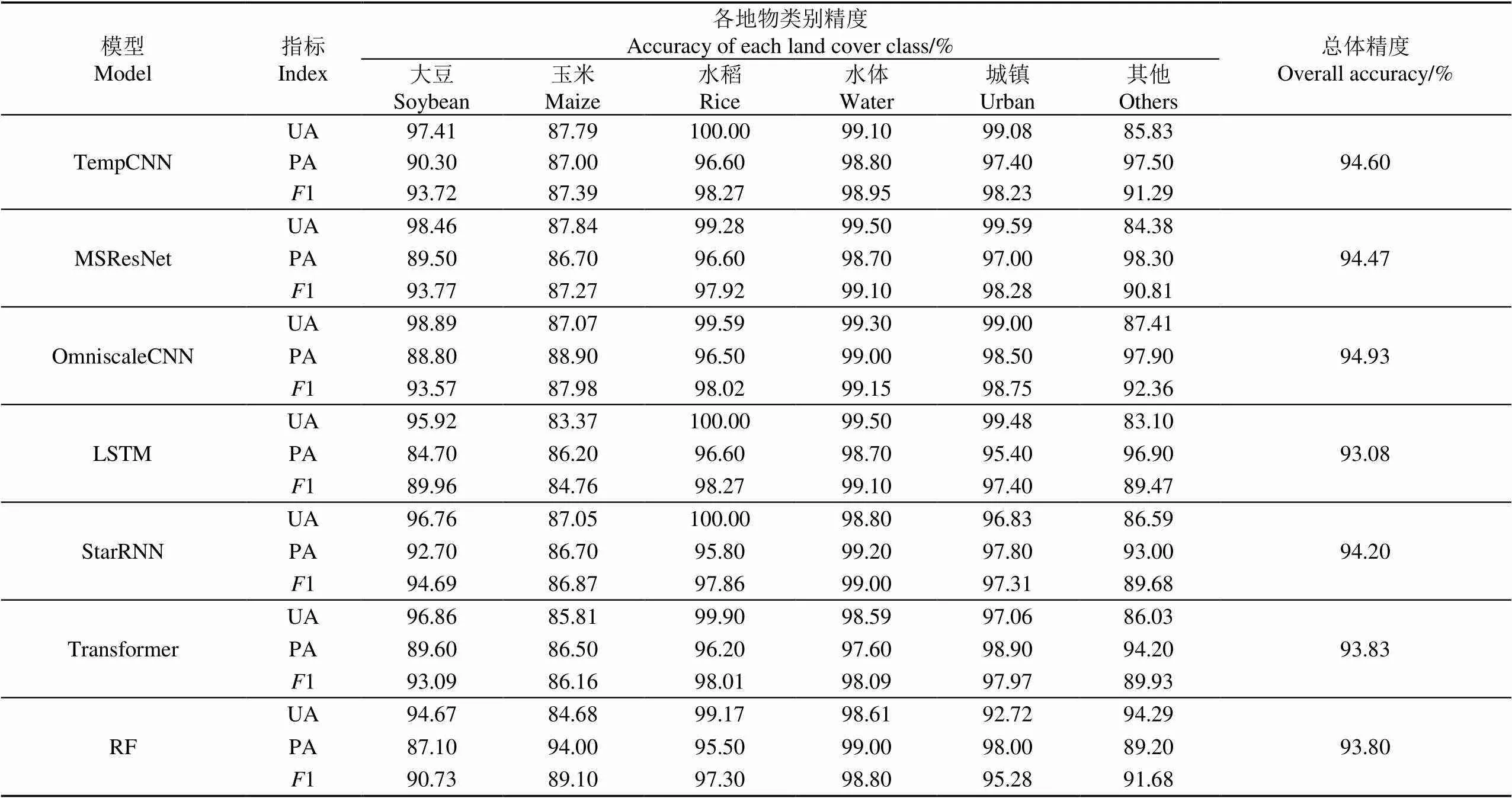

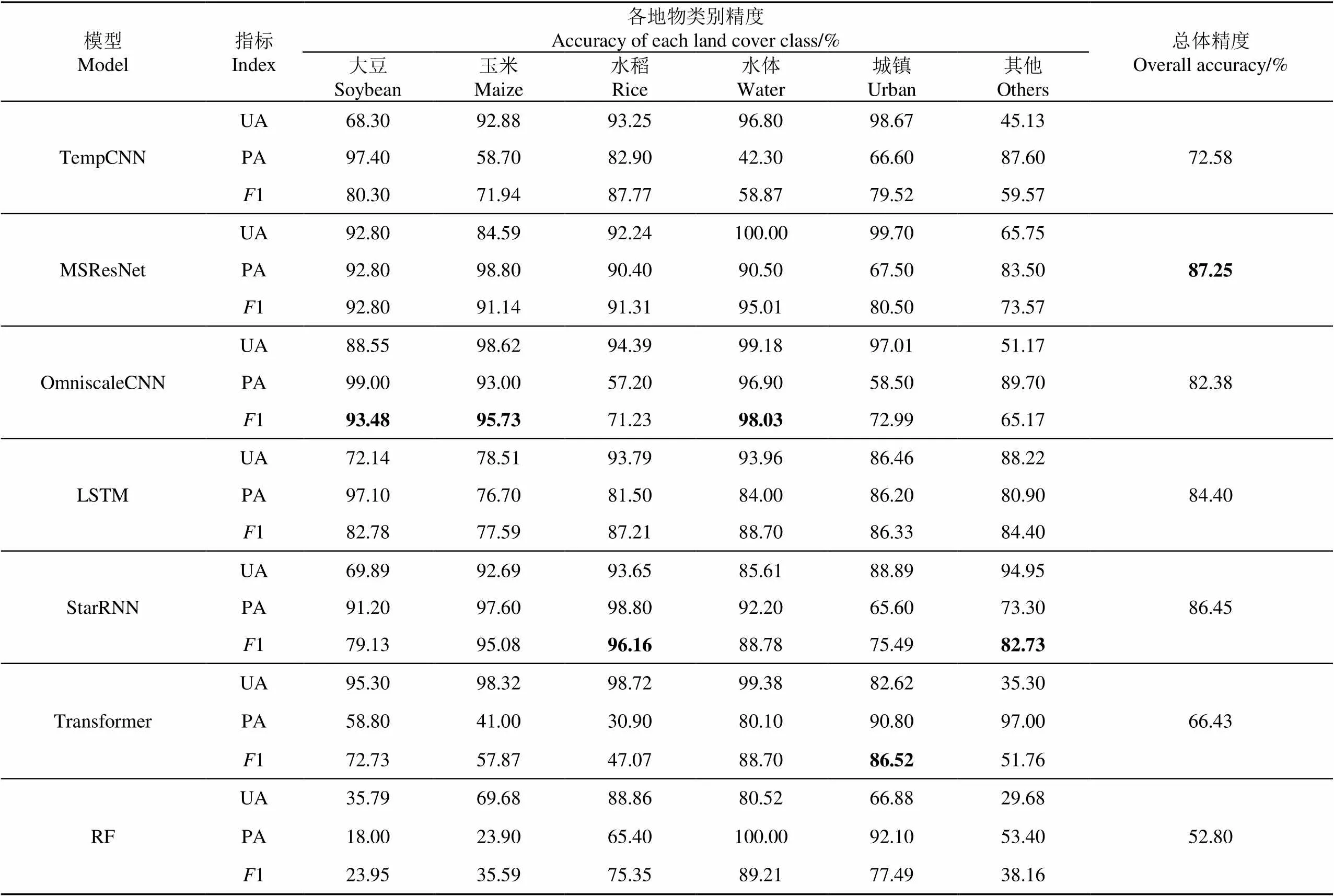

The models were trained with the Lindian training and validation sets and used to classify the Lindian imagery; the results of all models in the green square area are shown in Fig.5a. The models trained on the Lindian dataset were then transferred to Hailun to classify the Hailun imagery, and accuracy was assessed with the Hailun validation set; the results of all models are shown in Fig.5b. The accuracy metrics are given in Table 4 and Table 5.

Table 4 Accuracy of each model in Lindian

Note: UA, PA and F1 represent the user's accuracy, the producer's accuracy and the F1 score. Same below.

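In the Lindian study area, the classification results of all models look very similar. All models reached an overall accuracy of 93%~95%, with F1 scores of no less than 89%, 84% and 97% for soybean, maize and rice, respectively; there were no large performance differences, and the models reflected the spatial distribution of the three crops and the other land cover well. A common problem is confusion between soybean and maize pixels. Mixed planting of soybean and maize is common, and the relatively low spatial resolution of GF-6/WFV makes such pixels easy to confuse. In addition, soybean and maize have similar growth periods and spectral responses, so separating them with the raw bands is difficult, which lowers their producer's and user's accuracies. Higher-resolution data and added vegetation index bands are needed to further improve the identification of soybean and maize.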

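Owing to the small sample size, cloud contamination, and changes in natural land cover types and in the temporal composition of the data, the models showed varying degrees of overfitting after transfer, and overall accuracy dropped by 7.2%~41.0%. The overall accuracies and F1 scores show that, among the convolutional models, MSResNet kept good performance on all three crops. Because time series interpolation gives pixels in different regions different temporal compositions, MSResNet's good fit may stem from its relatively complex architecture and larger number of parameters, which give it stronger generalization. OmniScaleCNN can automatically adjust the convolution filter size to the data during classification and so capture classification features from the time series better; after transfer it identified the three crops better than TempCNN, whose filter size is fixed. Among the recurrent models, LSTM and StarRNN use gating units to counter exploding and vanishing gradients, and their overall accuracy dropped by less than 10% after transfer. The attention-based Transformer lost much of its ability to identify the three crops, with obvious misclassification. This may be because the Transformer relies on weak inductive biases and lacks conditional computation, which makes it poor at modeling very long time series and led to severe overfitting after transfer. RF can extract only a few shallow features during training and lost the most accuracy after transfer. In the "other" class, Lindian is dominated by grassland and Hailun by woodland, and every model identified this class less well after transfer. In summary, when the spatial location and temporal composition of the data are unchanged, all models classify pixels well and reflect the distribution of the three crops; when they change, only some of the convolution- or recurrence-based deep learning models can reflect the general distribution of each land cover class. Because crop planting structure and land cover types differ between regions, whether this conclusion holds elsewhere remains to be demonstrated.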

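Table 5 Accuracy of each model in Hailun

For computational efficiency, on a graphics workstation with an Intel Xeon 4114 processor and a GeForce RTX 2080Ti graphics card, the whole process was defined as training a model and using it to run inference on imagery of the Hailun study area covering about 10 000 km². The run time of each deep learning model was within 6.2 times that of the random forest model, and the whole process finished within 1 h, as shown in Table 6. In addition, acquiring and preprocessing GF-6/WFV time series data is time-consuming, which hinders the full use of time series deep learning models and GF-6/WFV data in crop classification; future work will optimize the combination of dates and bands to improve computational efficiency.

Table 6 Run time of each model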

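3 Conclusions

GF-6 is China's first medium-high spatial resolution satellite with red-edge, yellow and other bands suited to crop identification, and it also has high temporal resolution. This paper qualitatively and quantitatively evaluated several time series deep learning models for crop identification from GF-6/WFV time series data, visually analyzing and assessing the accuracy of the classification results of six deep learning models based on convolution, recurrence and attention and of a random forest model. The conclusions are as follows: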

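1) When the spatial location is unchanged, all models achieved F1 scores of no less than 89%, 84% and 97% for soybean, maize and rice, respectively, showing strong crop identification ability that meets the operational needs of high-accuracy crop mapping. Although the convolution, recurrence and attention mechanisms differ considerably, all models reached an overall accuracy of 93%~95%. This indicates that without spatial transfer, that is, when test and training data come from the same distribution, crop identification accuracy depends little on the classification mechanism of the model.

2) When the spatial location changes, the overall accuracy of the models dropped by 7.2%~41.0% because the natural land cover types and the temporal composition of the data changed. The crop identification ability of the convolution-based MSResNet did not change noticeably and the overall accuracy of the recurrence-based LSTM and StarRNN dropped by less than 10%, while the attention-based Transformer and random forest both identified the three crops markedly worse. This indicates that once spatial transfer places test and training data in different distributions, the classification mechanism strongly affects crop identification accuracy, and convolution- or recurrence-based models outperform the attention-based model and the random forest model. This conclusion applies only to the plain areas of Heilongjiang Province; other regions require further testing.

3) In terms of time consumption, the run time of each deep learning model was within 6.2 times that of the random forest model, and the whole process of training and inference finished in a short time.

A large number of deep learning models with different architectures can be built on the three mechanisms, and the relationship between classification mechanism and crop identification ability needs further exploration. Future work will focus on the interpretability of deep learning models and study how each classification mechanism selectively extracts the few features relevant to crop identification.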

[1] Wang Pengxin, Xun Lan, Li Li, et al. Extraction of planting areas of main crops based on sparse representation of time-series leaf area index[J]. Journal of Remote Sensing, 2018, 24(5): 121-129. (in Chinese with English abstract)

[2] Zhao Hongwei, Chen Zhongxin, Liu Jia. Deep learning for crop classification of remote sensing data: Applications and challenges[J]. Chinese Journal of Agricultural Resources and Regional Planning, 2020, 41(2): 35-49. (in Chinese with English abstract)

[3] Lecun Y, Bottou L. Gradient-based learning applied to document recognition[J]. Proceedings of the IEEE, 1998, 86(11):2278-2324.

[4] Hinton E, Williams J. Learning representations by back propagating errors[J]. Nature, 1986, 323(6088): 533-536.

[5] Maggiori E, Tarabalka Y, Charpiat G, et al. Convolutional neural networks for large-scale remote sensing image classification[J]. IEEE Transactions on Geoscience and Remote Sensing, 2016, 55(2):645-657.

[6] Postadjian T, Bris L, Sahbi H, et al. Investigating the potential of deep neural networks for large-scale classification of very high resolution satellite images[J]. Remote Sensing and Spatial Information Sciences, 2017, 31(5): 11-25.

[7] Zhao Fei, Zhang Wenkai, Yan Zhiyuan, et al. Multi-feature map pyramid fusion deep network for semantic segmentation on remote sensing data[J]. Journal of Electronics and Information Technology, 2019, 41(10): 44-50. (in Chinese with English abstract)

[8] Chen Yang, Fan Rongshuang, Wang Jingxue, et al. Cloud detection of ZY-3 satellite remote sensing images based on deep learning[J]. Acta Optica Sinica, 2018, 38(1): 32-42. (in Chinese with English abstract)

[9] Zhang Q, Yuan Q, Zeng C, et al. Missing data reconstruction in remote sensing image with a unified spatial-temporal-spectral deep convolutional neural network[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 56(8):4274-4288.

[10] Ozcelik F, Alganci U, Sertel E, et al. Rethinking CNN-based pansharpening: Guided colorization of panchromatic images via GANS[J]. IEEE Transactions on Geoscience and Remote Sensing, 2020, 59(4): 3486-3501.

[11] Zhao Hongwei, Chen Zhongxin, Jiang Hao, et al. Early growing stage crop species identification in southern China based on Sentinel-1A time series imagery and one-dimensional CNN[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2020, 36(3): 169-177. (in Chinese with English abstract)

[12] Pelletier C, Webb G I, Petitjean F. Temporal convolutional neural network for the classification of satellite image time series[J]. Remote Sensing, 2019, 11(5): 3154-3166.

[13] Heming L, Qi L. Hyperspectral imagery classification using sparse representations of convolutional neural network features[J]. Remote Sensing, 2016, 8(2):99-110.

[14] Ji S, Zhang Z, Zhang C, et al. Learning discriminative spatiotemporal features for precise crop classification from multi-temporal satellite images[J]. International Journal of Remote Sensing, 2020, 41(8): 3162-3174.

[15] Shunping J, Chi Z, Anjian X, et al. 3D convolutional neural networks for crop classification with multi-temporal remote sensing images[J]. Remote Sensing, 2018, 10(2):75-89.

[16] Ismail H, Forestier G, Weber J, et al. Deep learning for time series classification: A review[J]. Data Mining and Knowledge Discovery, 2019, 24(2): 57-69.

[17] Zhong L, Hu L, Zhou H. Deep learning based multi-temporal crop classification[J]. Remote Sensing of Environment, 2018, 221(3): 430-443.

[18] Rußwurm M, Körner M. Temporal vegetation modelling using long short-term memory networks for crop identification from medium-resolution multi-spectral satellite images[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops. IEEE Computer Society, 2017: 11-19.

[19] Yang Zeyu, Zhang Hongyan, Ming Jin, et al. Extraction of winter rapeseed from high-resolution remote sensing imagery via deep learning[J]. Bulletin of Surveying and Mapping, 2020, 522(9): 113-116. (in Chinese with English abstract)

[20] Ienco D, Gaetano R, Dupaquier C, et al. Land cover classification via multitemporal spatial data by deep recurrent neural networks[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(10): 1685-1689.

[21] Ndikumana E, Minh DHT, Baghdadi N, et al. Deep recurrent neural network for agricultural classification using multitemporal SAR Sentinel-1 for Camargue, France[J]. Remote Sensing, 2018, 10(8): 1217-1230.

[22] Campos-Taberner M, García-Haro F J, Martínez B, et al. Understanding deep learning in land use classification based on Sentinel-2 time series[J]. Scientific Reports, 2020, 10(1): 1-12.

[23] Rußwurm M, Körner M. Multi-temporal land cover classification with sequential recurrent encoders[J]. ISPRS International Journal of Geo-Information, 2018, 7(4): 129-143.

[24] Haobo L, Hui L, Lichao M. Learning a transferable change rule from a recurrent neural network for land cover change detection[J]. Remote Sensing, 2016, 8(6):506-513.

[25] Mou L, Bruzzone L, Zhu X X. Learning spectral-spatial- temporal features via a recurrent convolutional neural network for change detection in multispectral imagery[J]. IEEE Transactions on Geoscience and Remote Sensing, 2018, 57(2): 924-935.

[26] Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need[C]//Advances in Neural Information Processing Systems. NIPS, 2017: 5998-6008.

[27] Xu R, Tao Y, Lu Z, et al. Attention-mechanism-containing neural networks for high-resolution remote sensing image classification[J]. Remote Sensing, 2018, 10(10): 1602-1611.

[28] Liu R, Cheng Z, Zhang L, et al. Remote sensing image change detection based on information transmission and attention mechanism[J]. IEEE Access, 2019, 7: 156349-156359.

[29] Fu J, Liu J, Tian H, et al. Dual attention network for scene segmentation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2019: 3146-3154.

[30] Tang W, Long G, Liu L, et al. Rethinking 1d-cnn for time series classification: A stronger baseline[EB/OL]. (2021-01-12)[2021-04-20]https://arxiv.org/abs/2002.10061.

[31] Hochreiter S, Schmidhuber J. Long short-term memory[J]. Neural computation, 1997, 9(8): 1735-1780.

[32] Turkoglu M O, D'Aronco S, Wegner J, et al. Gating revisited: Deep multi-layer rnns that can be trained[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 8(12): 2145-2160.

[33] Wu Jianbin, Zhu Jianping, Xie Bangchang. A review of technologies on random forests[J]. Journal of Statistics and Information, 2011, 26(3): 32-38. (in Chinese with English abstract)

[34] Pelletier C, Valero S, Inglada J, et al. Assessing the robustness of Random Forests to map land cover with high resolution satellite image time series over large areas[J]. Remote Sensing of Environment, 2016, 187: 156-168.

[35] Pelletier C, Valero S, Inglada J, et al. Effect of training class label noise on classification performances for land cover mapping with satellite image time series[J]. Remote Sensing, 2017, 9(2): 173-180.

[36] Lyons M B, Keith D A, Phinn S R, et al. A comparison of resampling methods for remote sensing classification and accuracy assessment[J]. Remote Sensing of Environment, 2018, 208: 145-153.

Evaluation of deep learning algorithm for crop identification based on GF-6 time series images

Chen Shiyang, Liu Jia※

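(Institute of Agricultural Resources and Regional Planning, Chinese Academy of Agricultural Sciences, Beijing 100081, China)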

Crop type mapping from medium- and high-spatial-resolution satellite images is one of the most important tools in agricultural monitoring services. Taking Heilongjiang Province in northeast China as the study area, this study evaluated state-of-the-art deep learning models for crop type classification. Models based on the Convolutional Neural Network (CNN), the Recurrent Neural Network (RNN) and the Attention Mechanism (AM) were compared, with the traditional random forest (RF) model as the baseline. The six deep learning models were Temporal Convolutional Neural Network (TempCNN), Multi Scale 1D Residual Network (MSResNet), Omniscale Convolutional Neural Network (OmniscaleCNN), Long Short-Term Memory (LSTM), STAR Recurrent Neural Network (StarRNN) and Transformer. The procedure was as follows. First, GF-6 wide-field-view image time series were acquired between April and November for the Lindian and Hailun study areas in order to extract features of the three crops at different growth stages. The time series for Lindian and Hailun were composed of 41 and 48 GF-6 images, respectively. Preprocessing included RPC correction, radiometric calibration, and conversion to top-of-atmosphere and then surface reflectance using 6S atmospheric correction. Image interpolation and global min-max normalization were also applied to fill empty pixels and to improve the convergence speed and stability of the neural networks. The ground truth data were manually labelled from a field survey combined with GF-2 high-resolution images to generate the training and evaluation datasets. The datasets included six classes (rice, maize, soybean, water, urban and other), covering 2 003 629 pixels in Lindian and 935 679 pixels in Hailun. Second, all models (CNN-, RNN- and AM-based, and RF) were trained and evaluated in Lindian. All models achieved an overall accuracy of 93%-95% and F1-scores of at least 89%, 84% and 97% for soybean, maize and rice, respectively, the three major crops in both study areas. Third, the models trained in Lindian were transferred to Hailun, where the overall accuracy of each model declined by 7.2% to 41.0% owing to differences in land cover classes and in the temporal composition of the data. Among the CNNs, the accuracy of MSResNet in recognizing the three crops barely changed after transfer. Because OmniScaleCNN automatically adjusts the size of its convolution filters, its accuracy after transfer was better than that of TempCNN. Among the RNNs, LSTM and StarRNN use gating to counter exploding and vanishing gradients, and their overall accuracy declined by less than 10% after transfer. However, the accuracy of the attention-based Transformer and of RF dropped significantly. Visual analysis of the classified images showed that all models captured the distribution of the three crops well when the spatial location and temporal composition of the data were unchanged, whereas only some CNN- or RNN-based models captured the general distribution of all land cover classes when they changed. Furthermore, the run time of each deep learning model was within 1 h and no more than 6.2 times that of random forest, where the whole process comprised model training and inference on imagery of the Hailun study area covering about 10 000 km². In summary, deep learning with GF-6 time series met the needs of high-precision, large-scale crop mapping in terms of classification accuracy and operating efficiency, and transferred better than the traditional model.

crops; remote sensing; recognition; deep learning; GF-6; time series; Heilongjiang

Chen Shiyang, Liu Jia. Evaluation of deep learning algorithm for crop identification based on GF-6 time series images[J]. Transactions of the Chinese Society of Agricultural Engineering (Transactions of the CSAE), 2021, 37(15): 161-168. (in Chinese with English abstract) doi:10.11975/j.issn.1002-6819.2021.15.020 http://www.tcsae.org

2021-05-08

2021-07-13

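Demonstration System for High-Resolution Agricultural Remote Sensing Monitoring and Evaluation (Phase II) (09-Y30F01-9001-20/22)

Chen Shiyang, research interests: crop classification from remote sensing based on deep learning. Email: genghisyang@outlook.com

Liu Jia, research fellow, research interests: operational remote sensing monitoring. Email: liujia06@caas.cn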

10.11975/j.issn.1002-6819.2021.15.020

S127

A

1002-6819(2021)-15-0161-08